As more enterprises containerize their applications, the operating environment for application workloads has become increasingly complex. Nearly 60% of enterprises already use or plan to deploy both virtual machines (VMs) and containers to meet the diverse performance and agility requirements of their applications.*

* Data is retrieved from “Kubernetes and Cloud Native Operations Report 2022”, Juju.

However, as virtualization and container platforms are often provided by different vendors and require distinct O&M skills, many IT personnel face challenges in achieving unified resource orchestration and efficient management across both environments.

To better support mixed VM and container workloads, SmartX introduces the VM-Container Converged Infrastructure (VCCI), leveraging its full-stack hyperconvergence capabilities. This unified architecture encompasses server virtualization, distributed storage, networking and security, and container management. It enables users to centrally manage foundational resources, improving both resource utilization and the interconnectivity between virtualized and containerized applications.

Challenges posed by mixed VM and container workloads

Currently, although there is a growing trend for enterprises to deploy new business applications on containers (about 75%), many application systems still need to run on VMs. There are three major contributing factors:

- Users often find it difficult to completely rebuild their legacy systems due to their outdated technology stack and architecture. As a result, these systems are typically maintained in a virtualized environment to ensure performance and stability.

- Not all applications are suitable for containerization. For example:

  - Applications with strict demands for high performance and low latency.

  - Applications with infrequent business changes.

  - Some stateful applications that would significantly increase O&M complexity after containerization.

  - Applications sensitive to security isolation.

  - Applications inherently suited to VMs, such as GitLab and Harbor.

- Emerging generative AI models cannot be completely containerized in some fine-tuning and inference use cases.

Therefore, users still need to run some applications on VMs alongside containers. When managing these two environments, O&M teams often face fragmentation in three areas:

- Fragmented O&M: Virtualization and container platforms are typically provided by different vendors. Users need to manage distinct platforms, which hinders efficient, unified O&M across environments. Moreover, because VMs and containers require different O&M skills, IT personnel need to master both skill sets.

- Fragmented application interoperability: The components of an application system can be separately deployed on VMs and containers. However, due to the difficulties of network interconnection between virtualization and container environments, these components can become isolated from each other and fail to meet business demands. Additionally, it is difficult for users to implement fine-grained controls over container network traffic as easily as they can with VMs.

- Fragmented resource provisioning: Traditionally, VMs and containers independently schedule their required computing and storage resources, leading to lower resource utilization and slower delivery speeds. This becomes particularly challenging in AI application scenarios where resource demands fluctuate frequently and require diverse data storage. Therefore, IT infrastructure should support flexible management of CPU & GPU resources and storage resources in both environments, catering to the diverse storage needs of different data types.

Existing Solutions for Converged Deployment of Container and VM: Pros and Cons

To address these challenges, the mainstream approach involves deploying containers and VMs in a converged manner, relying on underlying IT infrastructure for unified resource provisioning. According to Gartner’s Market Guide for Server Virtualization (2023), there are currently two prominent converged solutions:

- Container-centric (emerging approach of containerized virtualization):

  - VM in Container: With solutions like KubeVirt, VMs can be created, run, and managed within a Kubernetes cluster. Kubernetes operates directly on bare metal, allowing users to manage VMs and containers together through the Kubernetes API, thereby reducing learning costs.

  - Container in Lightweight VM: This approach launches OCI-compliant containers within VMs, leveraging the benefits of virtualization. It enhances security isolation for containers while keeping them lightweight and fast to start. Key technologies include Kata, gVisor, and Firecracker. However, these solutions are better suited to serverless computing and are not optimal for traditional VM-based applications.

- Coexist (traditional approach of virtualized containers): This approach, used by products such as VMware Tanzu and SmartX SKS, supports deploying, running, and managing containers and VMs in a virtualized environment. Users run VMs on a virtualization or hyperconvergence platform and create Kubernetes clusters on selected VMs, then centrally manage the Kubernetes clusters and VMs through a unified GUI.

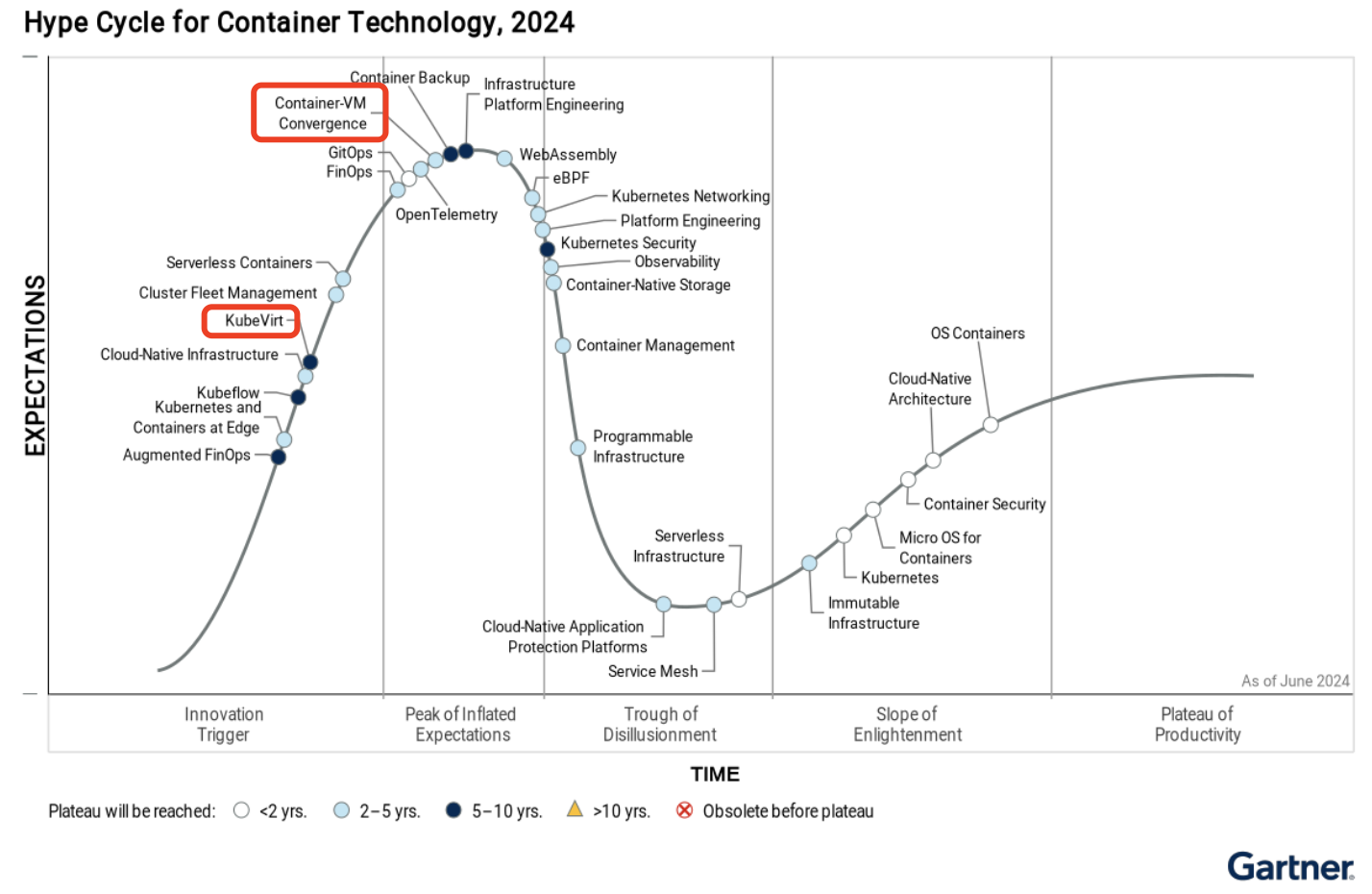

According to Gartner’s Hype Cycle for Container Technology, 2024, the two Container-centric approaches are still immature and are expected to take five years to be fully adopted by the market. At present, these solutions are better suited to test environments at enterprises with specialized technical staff.

In contrast, the Coexist approach builds on mature virtualization and hyperconvergence platforms and is therefore more reliable. It also offers a higher level of infrastructure convergence, a broader array of advanced virtualization features and device support, and better storage stability and performance. For these reasons, the Coexist approach is currently the more appropriate way to integrate virtualization and container deployment.

Currently, some container platform vendors, virtualization/hyperconvergence vendors, and public cloud vendors offer solutions for converging containers and VMs based on the Coexist approach. The chart below compares the features and advantages of solutions from different vendors. Users should carefully evaluate the functions of each product and choose an end-to-end solution that features a mature technology stack, a high level of container and VM integration, an open ecosystem, and ease of use.

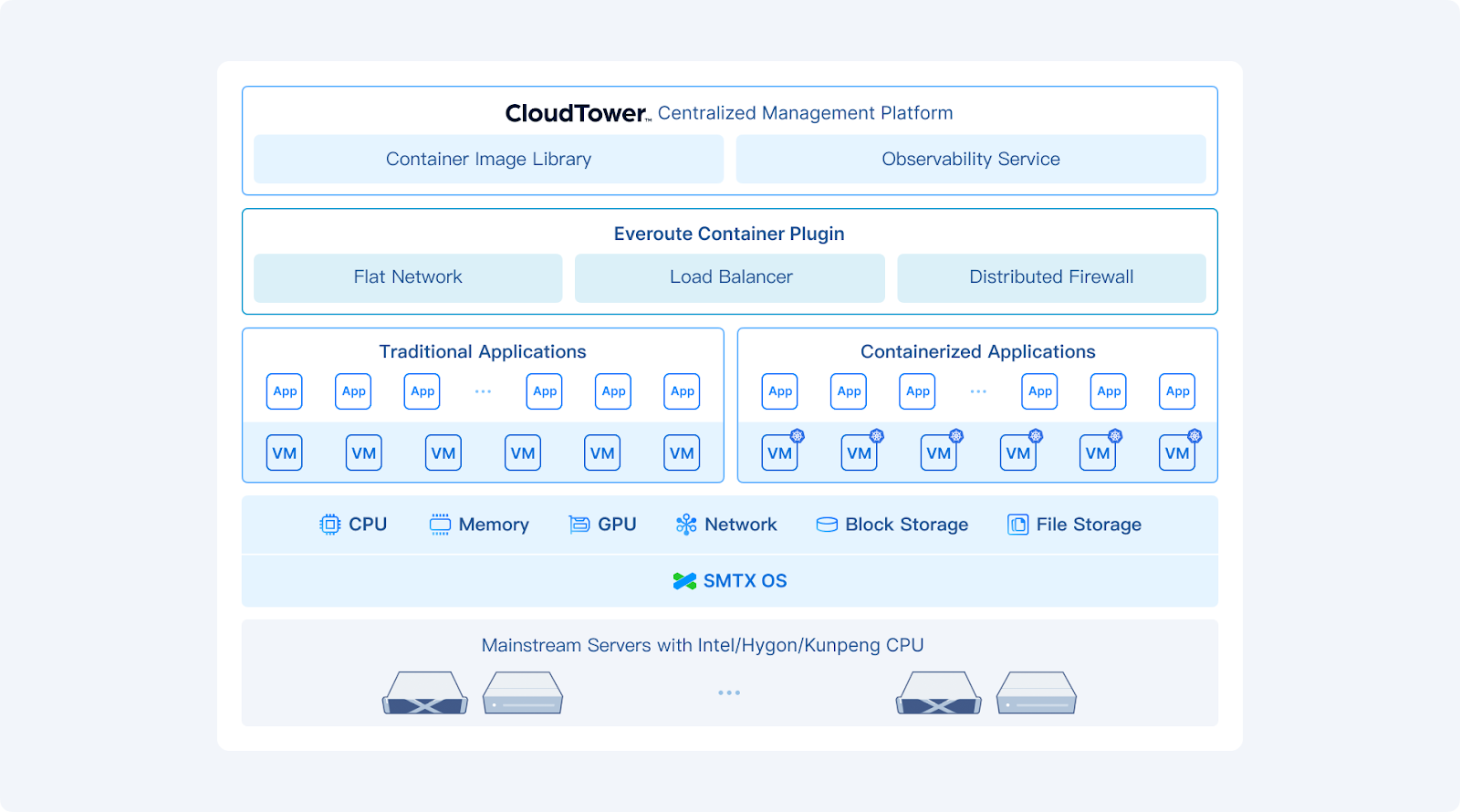

SmartX VCCI Solution

Based on the Coexist approach, SmartX VM-Container Converged Infrastructure (VCCI) allows enterprise users to run both virtualized and containerized applications on a converged architecture. It comprises the SmartX hyperconverged software SMTX OS (which includes the built-in native hypervisor ELF and the distributed storage ZBS), SMTX Kubernetes Service (SKS), the software-defined network and security product Everoute, and the multi-cluster management platform CloudTower. It enables users to centrally manage foundational resources, simplifies network interconnectivity between VMs and containers, and improves resource utilization.

Unified management of IT infrastructure

Leveraging server virtualization, distributed storage, container management, and network-related components provided by SmartX full-stack hyperconvergence software, users can build an integrated IT infrastructure converging VMs and containers. VCCI can be managed and maintained through a unified management platform, significantly reducing users’ O&M burden.

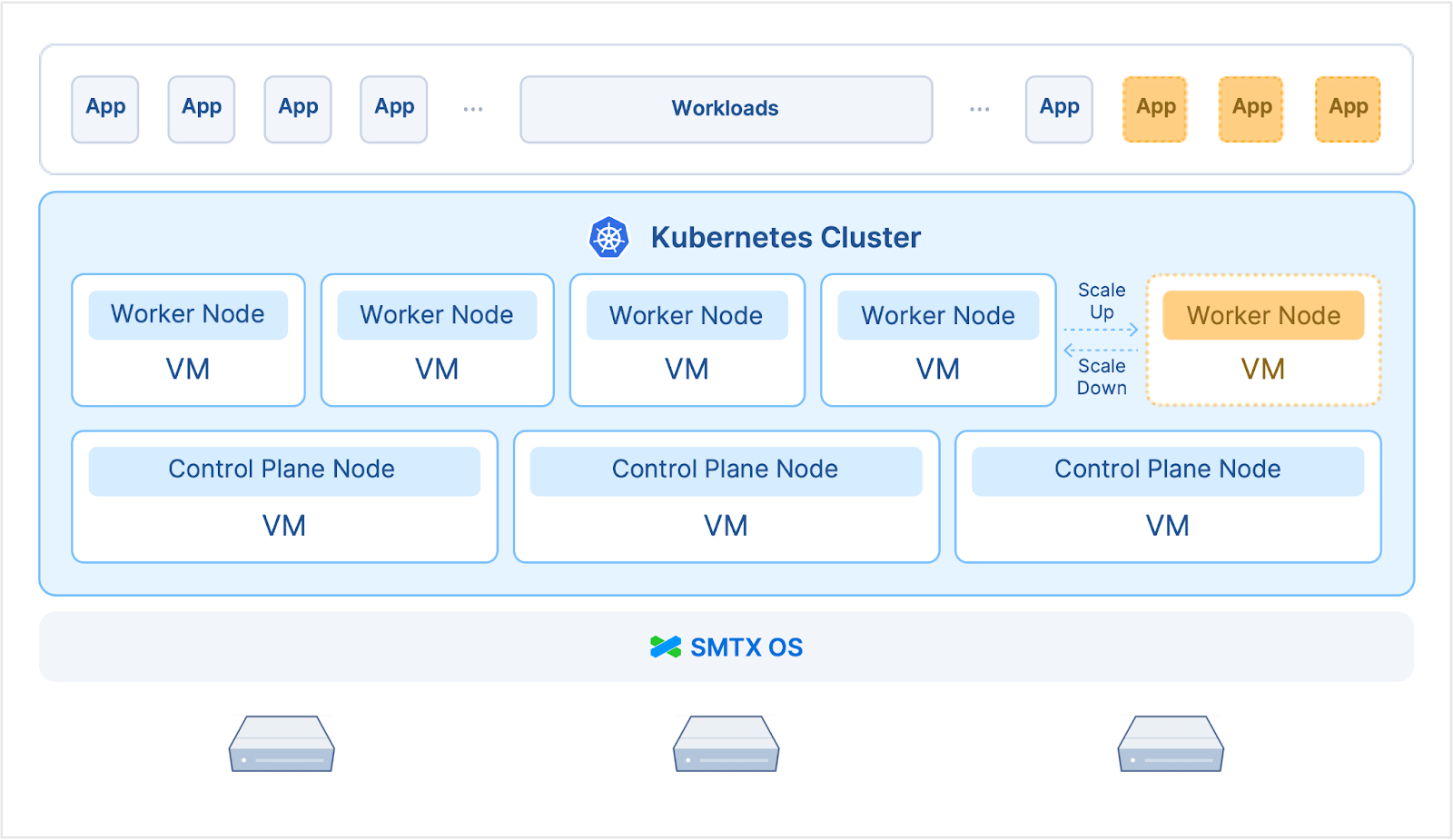

VM features enhance the delivery speed, flexibility, and reliability of Kubernetes clusters

VCCI speeds up the delivery of Kubernetes clusters by creating them in minutes, without manual operations such as resource preparation and operating system installation. These Kubernetes clusters are also elastically scalable: cluster resource usage is detected automatically and can trigger horizontal scaling of nodes.
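The scale-out decision described above can be illustrated with a minimal Python sketch. It is not the SKS implementation: the `NodeUsage` type, the `desired_node_count` function, the 60% utilization target, and the node limits are all hypothetical, and the proportional rule is borrowed from the Kubernetes Horizontal Pod Autoscaler formula and applied to worker nodes purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class NodeUsage:
    """CPU usage of one worker node as a fraction of its capacity (0.0 to 1.0)."""
    name: str
    cpu_fraction: float


def desired_node_count(nodes, target=0.6, min_nodes=1, max_nodes=10):
    """Return the node count that would bring average usage back to `target`.

    Hypothetical rule, modeled on the HPA formula:
    desired = ceil(current_count * current_average / target).
    """
    if not nodes:
        return min_nodes
    average = sum(n.cpu_fraction for n in nodes) / len(nodes)
    # Round before ceil so floating-point noise (e.g. 3.0000000000000004)
    # does not add a spurious node.
    raw = round(len(nodes) * average / target, 6)
    desired = math.ceil(raw)
    # Clamp to the assumed cluster limits.
    return max(min_nodes, min(max_nodes, desired))


if __name__ == "__main__":
    busy = [NodeUsage(f"worker-{i}", 0.9) for i in range(3)]
    idle = [NodeUsage(f"worker-{i}", 0.2) for i in range(4)]
    print("busy cluster ->", desired_node_count(busy))
    print("idle cluster ->", desired_node_count(idle))
```

Under these assumed values, three worker nodes averaging 90% CPU lead to a desired count of five nodes, while four nodes averaging 20% shrink to two. A real autoscaler would also apply cooldown periods and tolerance bands to avoid flapping.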

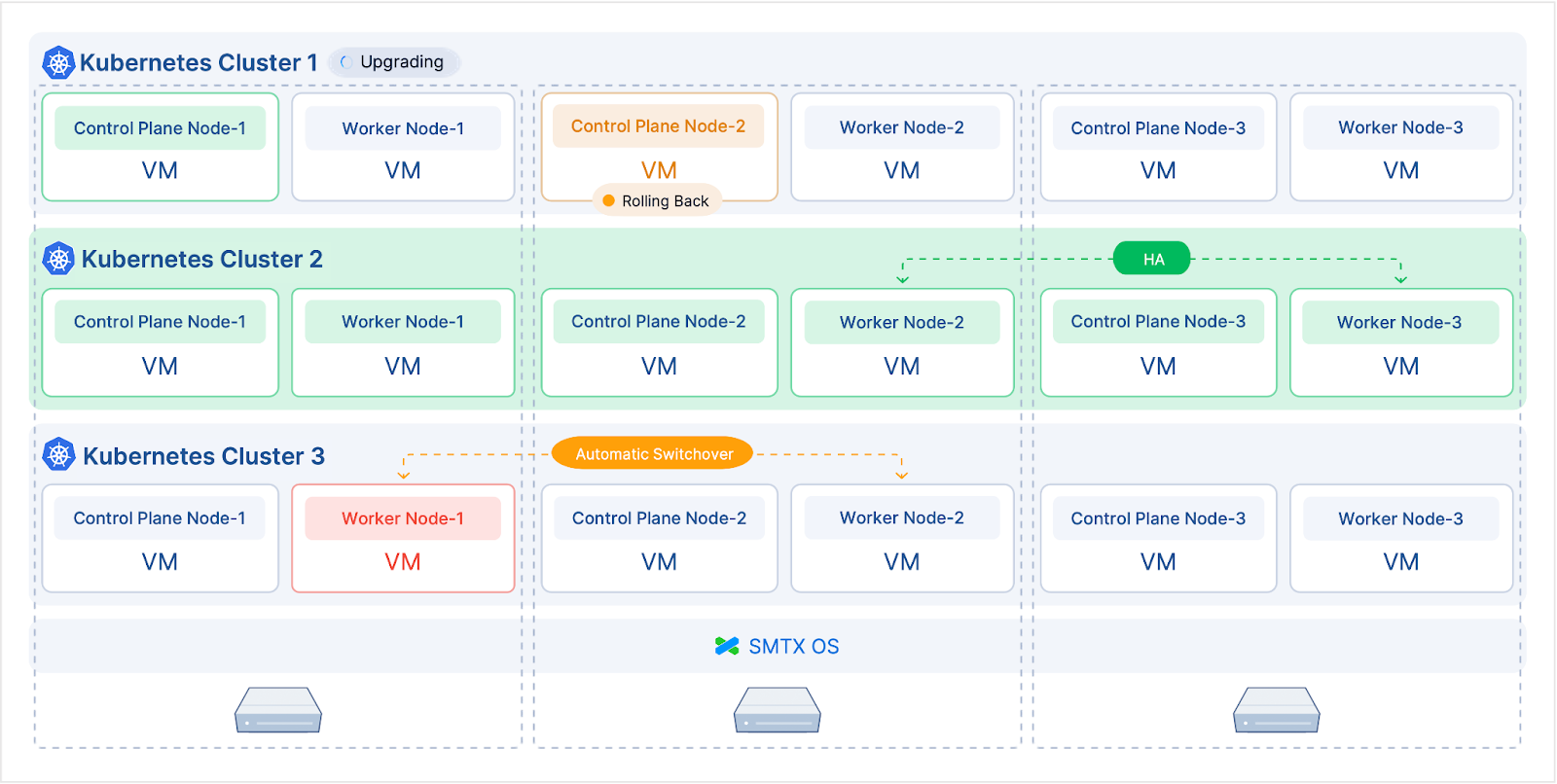

In addition to Kubernetes’ built-in capabilities, virtualization’s high availability features further enhance the reliability of the entire architecture:

- Kubernetes clusters’ Control Plane nodes are placed on different physical hosts to ensure that a physical host failure will not cause multiple Control Plane failures within the same Kubernetes cluster, maintaining cluster availability.

- Kubernetes VM nodes support automatic migration and restarting on other healthy nodes in case of physical host failure.

- Kubernetes VM nodes can be manually or automatically replaced when they fail.

- Clusters support rolling upgrades and can be rolled back if an upgrade fails.
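The first point, spreading Control Plane nodes across physical hosts, can be sketched in a few lines of Python. This is an illustration rather than the VCCI scheduler: the function names and host names are hypothetical, and the quorum check assumes the control plane needs a strict majority of its members to stay available, as etcd does.

```python
def place_control_plane(node_names, hosts):
    """Assign each Control Plane VM to a distinct physical host (anti-affinity).

    Raises ValueError when there are fewer hosts than nodes, because two
    Control Plane nodes would then share one failure domain.
    """
    if len(node_names) > len(hosts):
        raise ValueError("not enough hosts for strict anti-affinity")
    return dict(zip(node_names, hosts))


def surviving_nodes(placement, failed_host):
    """Return the Control Plane nodes that are not on the failed host."""
    return [node for node, host in placement.items() if host != failed_host]


def has_quorum(total, alive):
    """Assumed majority rule: more than half of the members must be alive."""
    return alive >= total // 2 + 1


if __name__ == "__main__":
    nodes = ["cp-1", "cp-2", "cp-3"]
    spread = place_control_plane(nodes, ["host-a", "host-b", "host-c"])
    stacked = {node: "host-a" for node in nodes}  # no anti-affinity

    for label, placement in (("spread", spread), ("stacked", stacked)):
        alive = surviving_nodes(placement, "host-a")
        print(label, alive, "quorum:", has_quorum(len(nodes), len(alive)))
```

With the spread placement, losing `host-a` leaves two of three Control Plane nodes and the majority is preserved. With all three nodes stacked on `host-a`, the same failure takes down the whole control plane.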

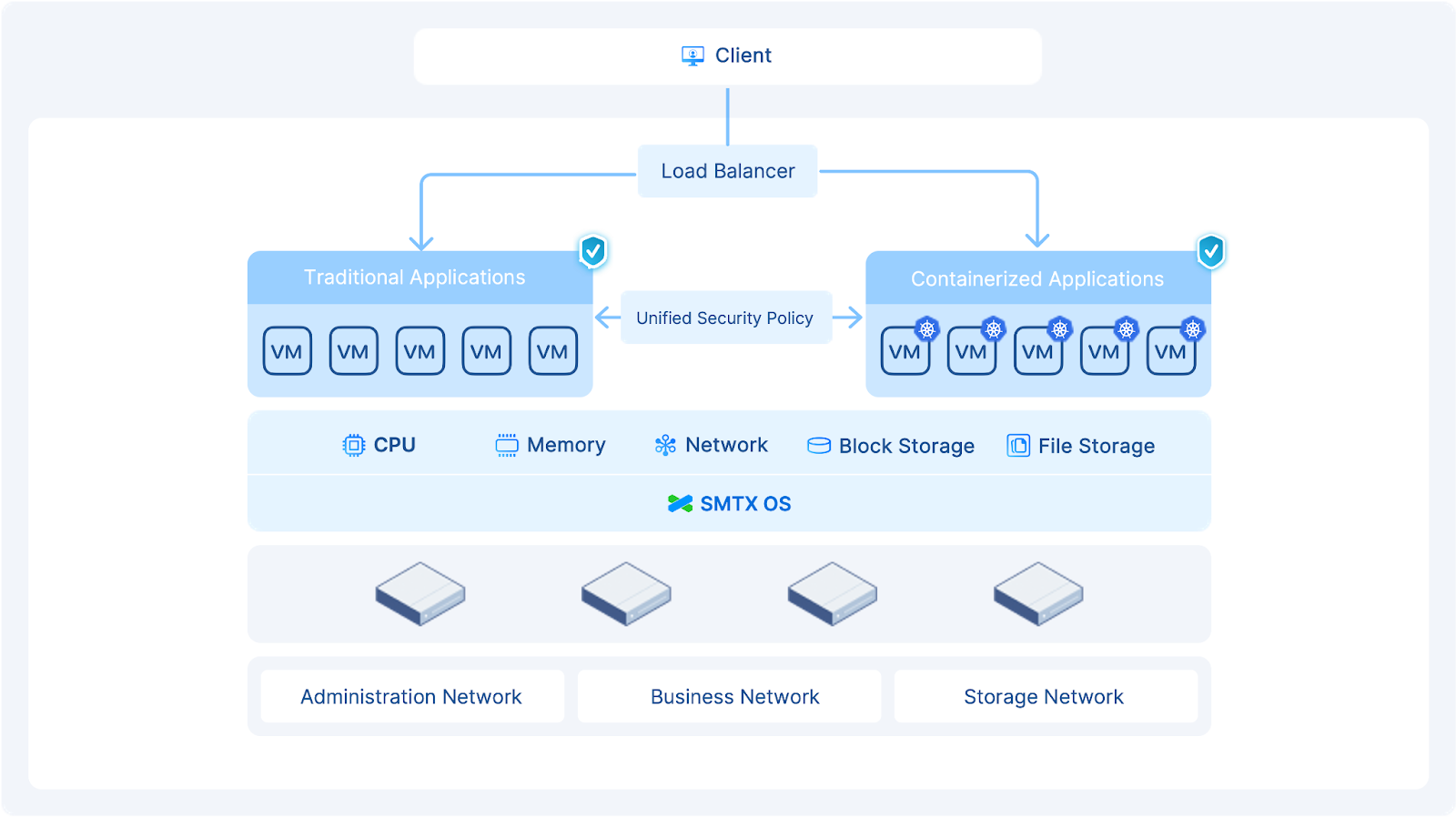

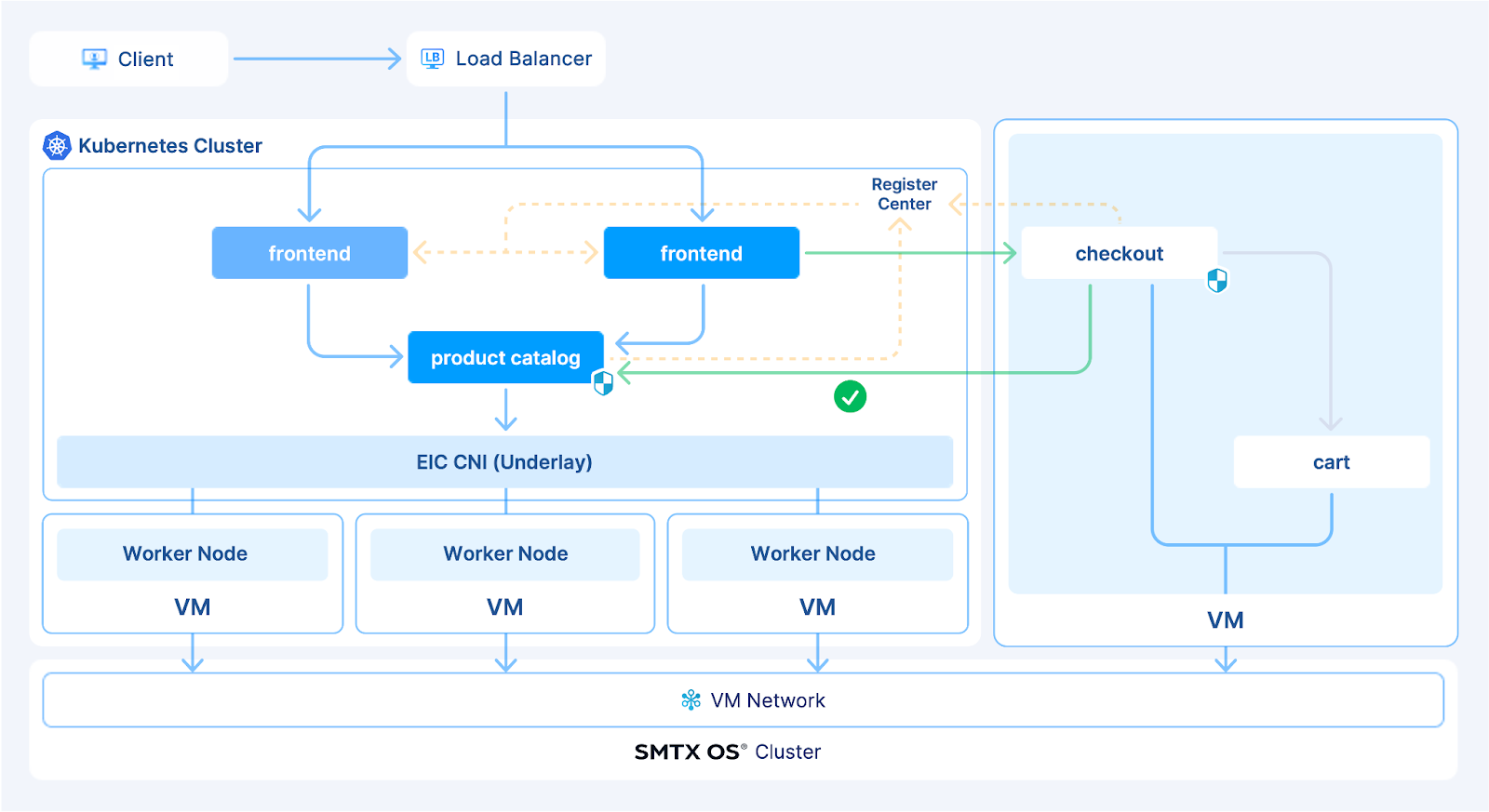

Interconnection between virtualized and containerized applications

Leveraging Everoute and EIC plug-ins, VCCI provides a flat network architecture for both virtualized and container environments, enabling interoperability between applications on VMs and containers. It also allows users to manage network security policies together, at a finer granularity, for application components in both environments. This improves access efficiency and enhances the security of east-west traffic in both environments.
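To make the idea of one policy model for both environments concrete, the following Python sketch evaluates label-based allow rules without caring whether an endpoint is a VM or a pod. It does not reflect the Everoute API: the `Endpoint` and `AllowRule` types, the label strings, and the default-deny behavior are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    kind: str            # "vm" or "pod"; never consulted by the policy check
    labels: frozenset    # e.g. frozenset({"tier=web"})


@dataclass(frozen=True)
class AllowRule:
    src_label: str
    dst_label: str
    port: int


def is_allowed(rules, src, dst, port):
    """Default-deny: allow only if a rule matches both labels and the port."""
    return any(
        rule.src_label in src.labels
        and rule.dst_label in dst.labels
        and rule.port == port
        for rule in rules
    )


if __name__ == "__main__":
    web_pod = Endpoint("web-7f9c", "pod", frozenset({"tier=web"}))
    db_vm = Endpoint("db-01", "vm", frozenset({"tier=db"}))
    rules = [AllowRule("tier=web", "tier=db", 5432)]

    print(is_allowed(rules, web_pod, db_vm, 5432))  # pod to VM on the allowed port
    print(is_allowed(rules, web_pod, db_vm, 22))    # same pair, port not listed
    print(is_allowed(rules, db_vm, web_pod, 5432))  # reverse direction
```

Because the check looks only at labels and ports, a single rule covers a containerized web tier talking to a database VM, which is the kind of east-west control the flat network is meant to simplify.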

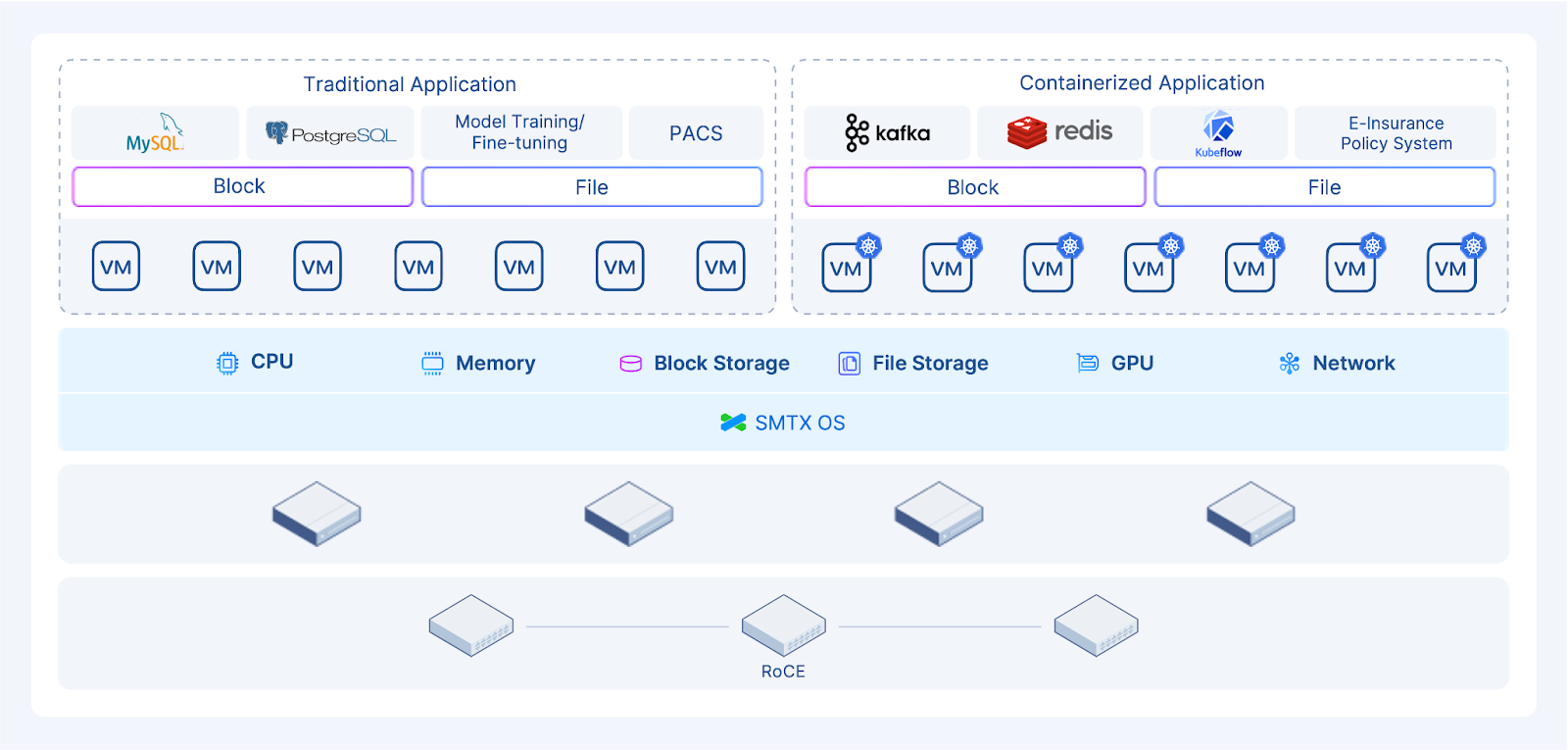

Addressing diverse data storage needs

SmartX’s independently developed distributed storage provides stable, high-performance storage services for both virtualized and containerized applications. It supports multiple storage types, such as block and file, to address the data storage needs of diverse applications. It is also compatible with a range of hardware devices, from cost-efficient hard disk drives to higher-performance NVMe storage devices.

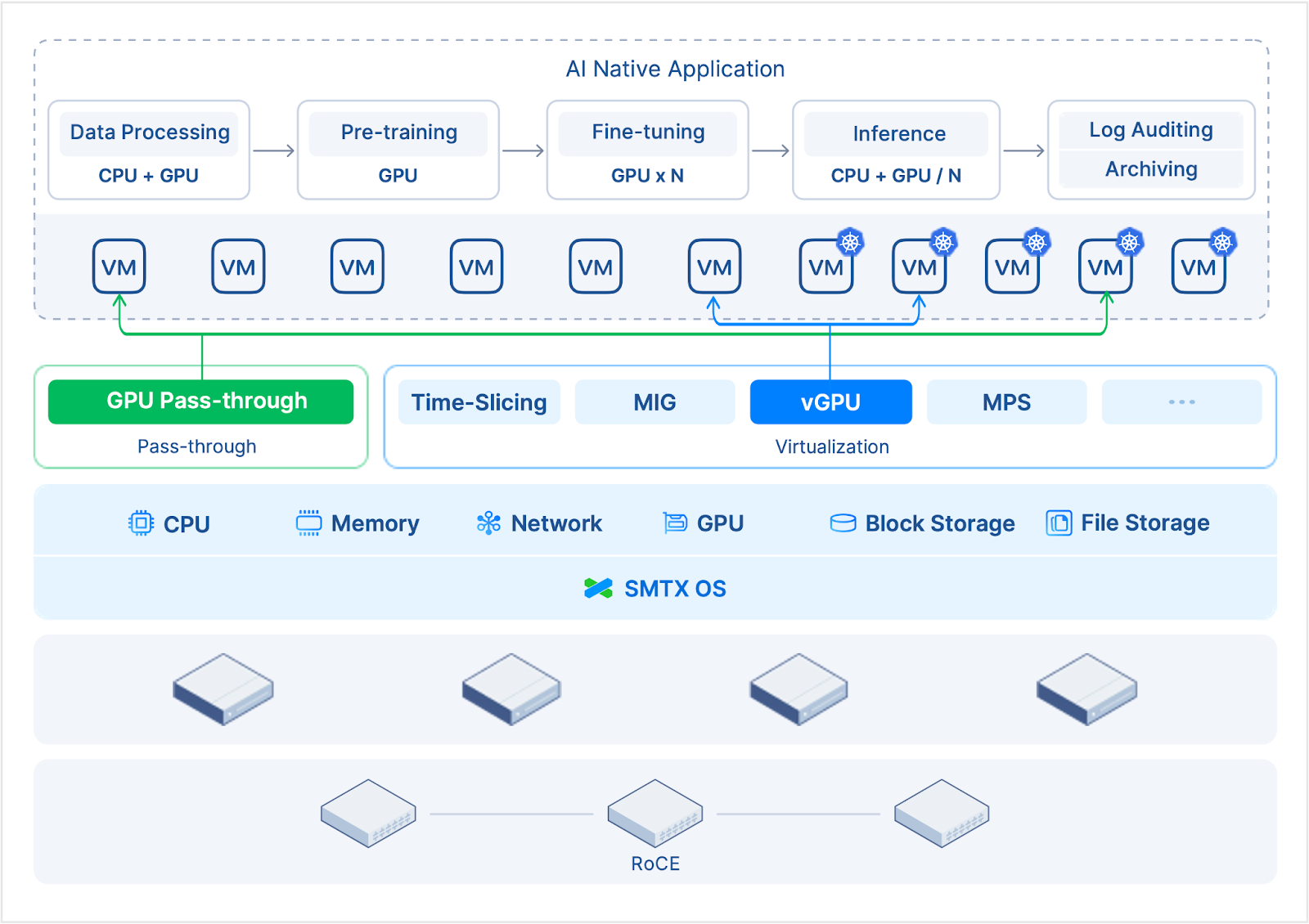

Accelerating application resource delivery

The converged deployment of VMs and containers supports a wide range of AI/ML models, allowing effective allocation of CPU and GPU resources to meet AI applications’ varying requirements in performance, security, scalability, agility, and more. This approach enhances resource utilization and reduces overall costs. Additionally, VCCI’s GPU sharing scheme and DRS features can further improve resource utilization.

Advantages

- Ease of use: Achieve unified management of VMs and containers with one set of IT infrastructure. Simple and easy to use, with low O&M burden.

- Time-saving: Rapid platform deployment. Fast delivery of Kubernetes clusters. Quick application upgrades. Enhanced delivery efficiency.

- High stability: Stable and reliable platform with outstanding performance, improving application reliability and ensuring business continuity.

- Cost-saving: Start up with a minimum of three nodes, with on-demand scalability. Unified infrastructure management improves resource utilization.

References:

1. Kubernetes and Cloud Native Operations Report 2022, Juju

https://juju.is/cloud-native-kubernetes-usage-report-2022

2. Software-Defined Computing Software Market Biannual Tracking Report (2024), IDC

https://www.idc.com/getdoc.jsp?containerId=prCHC52339224

3. Market Guide for Server Virtualization (2023), Gartner

https://www.gartner.com/document/4400899

4. Hype Cycle for Container Technology, 2023, Gartner