“Global warming isn’t a prediction. It is happening.” In August 2021, the United Nations Intergovernmental Panel on Climate Change (IPCC) released the first part of its Sixth Assessment Report (AR6), warning that global warming is expected to reach or exceed the 1.5°C threshold within the next two decades.

In response, the UN has called for carbon neutrality by 2050, and many countries have announced their own targets, including China (2060), Canada (2050), Germany (2045), the United States (2050), and the United Kingdom (2050).

Carbon neutrality is a state of net-zero carbon dioxide emissions. This can be achieved by balancing emissions of carbon dioxide with its removal (often through carbon offsetting) or by eliminating emissions from society (the transition to the “post-carbon economy”).

Low-carbon data center

Carbon neutrality is a far-reaching, systemic undertaking that requires participation from every country and every sector of society.

As essential infrastructure for economic and social activity, data centers are also notoriously power-hungry.

In China, for example, data center electricity consumption has grown by more than 10% a year over the past decade, exceeding 200 billion kWh in 2020, or about 2.71% of the country's total electricity consumption.

A large share of the electricity that data centers consume comes from thermal power generation, which emits substantial amounts of greenhouse gases and other pollutants.

Beyond cutting consumption wherever possible, data centers must also improve their energy efficiency. Power Usage Effectiveness (PUE), the ratio of a facility's total energy use to the energy delivered to its IT equipment, is an important indicator of data center energy efficiency. The closer PUE is to 1, the less energy goes to cooling, power distribution, and other overhead, and the more efficient the data center is.
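As a quick illustration of how the metric behaves, the sketch below computes PUE from two energy readings and the share of facility energy lost to overhead. It follows the standard definition (total facility energy divided by IT equipment energy); the function names and the annual figures are hypothetical, chosen only for the example.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility total cannot be below the IT load")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that never reaches IT equipment."""
    return 1 - 1 / pue_value


# Hypothetical annual figures for an illustrative facility.
facility_kwh = 15_000_000
it_kwh = 10_000_000
value = pue(facility_kwh, it_kwh)
print(f"PUE = {value:.2f}, overhead = {overhead_share(value):.0%}")  # PUE = 1.50, overhead = 33%
```

A PUE of 1.5 therefore means roughly a third of the facility's energy is spent on something other than the IT load; pushing PUE toward 1 shrinks that share toward zero.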

A two-pronged approach

Measures for reducing data centers' carbon emissions fall into two categories: IT infrastructure and non-IT infrastructure.

For non-IT infrastructure, common measures include:

- siting data centers close to locations with green and clean energy;

- using renewable energy as much as possible;

- applying liquid cooling technology instead of fan cooling;

- recycling and reusing the waste heat from data centers.

The most effective of these is to power data centers, and all company facilities, with 100% renewable energy. This is not easy: it took Apple five years to reach 100% renewable energy across all of its facilities worldwide.

For IT infrastructure, there are a number of initiatives that companies can implement immediately to improve energy efficiency:

- minimizing idle IT equipment by consolidating zombie servers with distributed and virtualization technologies;

- using virtualization and storage pools to significantly improve hardware utilization;

- managing chip power consumption by adopting more energy-efficient processors and using their adaptive power management features.

Among them, virtualization and hyper-converged infrastructure (HCI) are expected to be the leading forces in improving data centers’ energy efficiency.

Virtualization is already widespread, and HCI has become mainstream in recent years. HCI unifies the core elements of a data center (compute, storage, networking, and management tools) in a single converged platform. It is software-defined: it replaces the dedicated hardware of traditional architectures, such as centralized storage arrays, with standard x86 or ARM servers, which addresses the complex management and difficult scaling that traditional architectures suffer from.

Compared with the traditional three-tier architecture, HCI collapses the stack into two tiers. This saves considerable space in the server room and consolidates compute resources further, improving the server room's energy efficiency.

Because HCI combines compute virtualization with distributed storage, replacing both traditional physical and traditional virtual environments, it can significantly reduce data centers' carbon emissions.

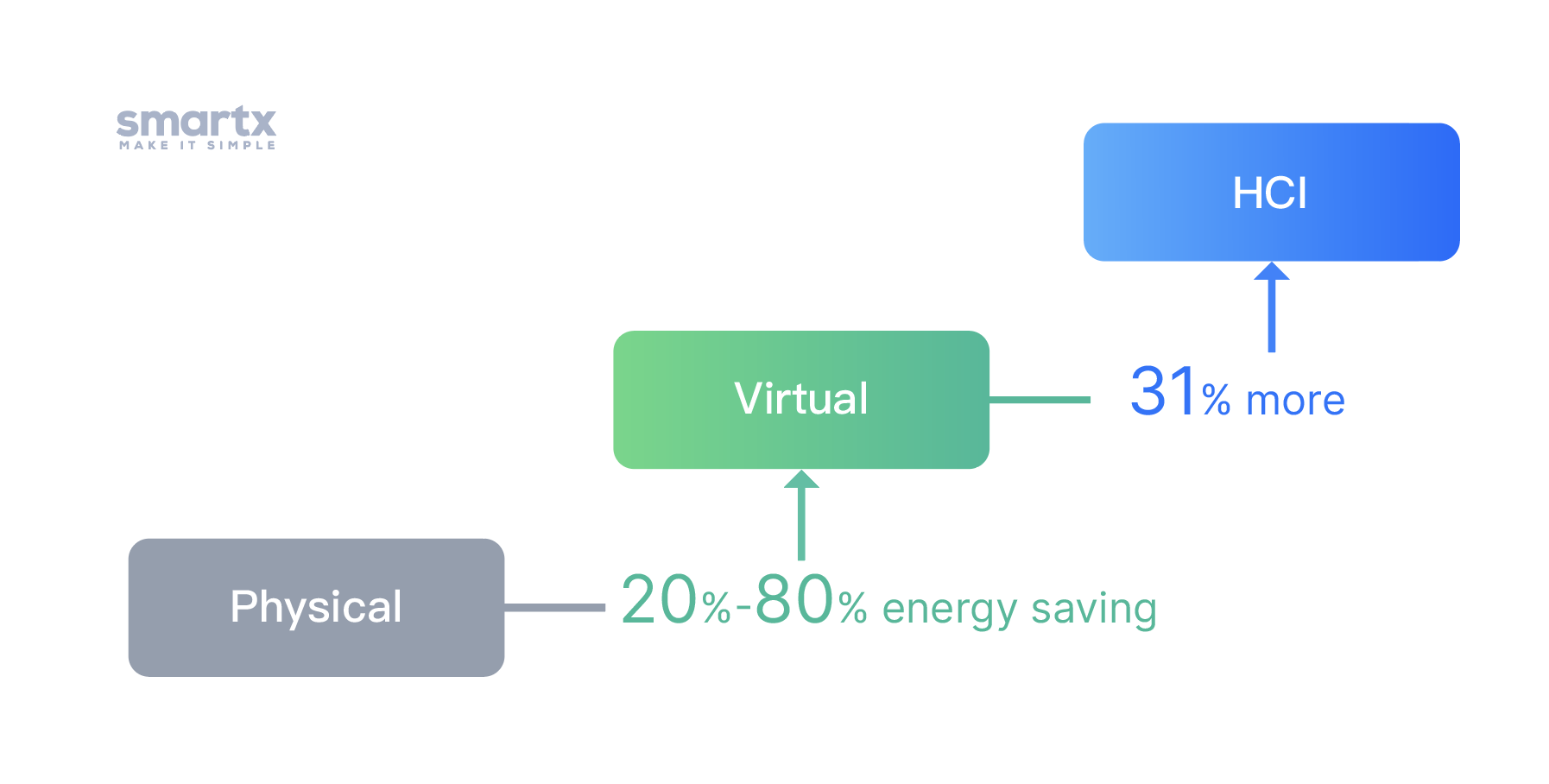

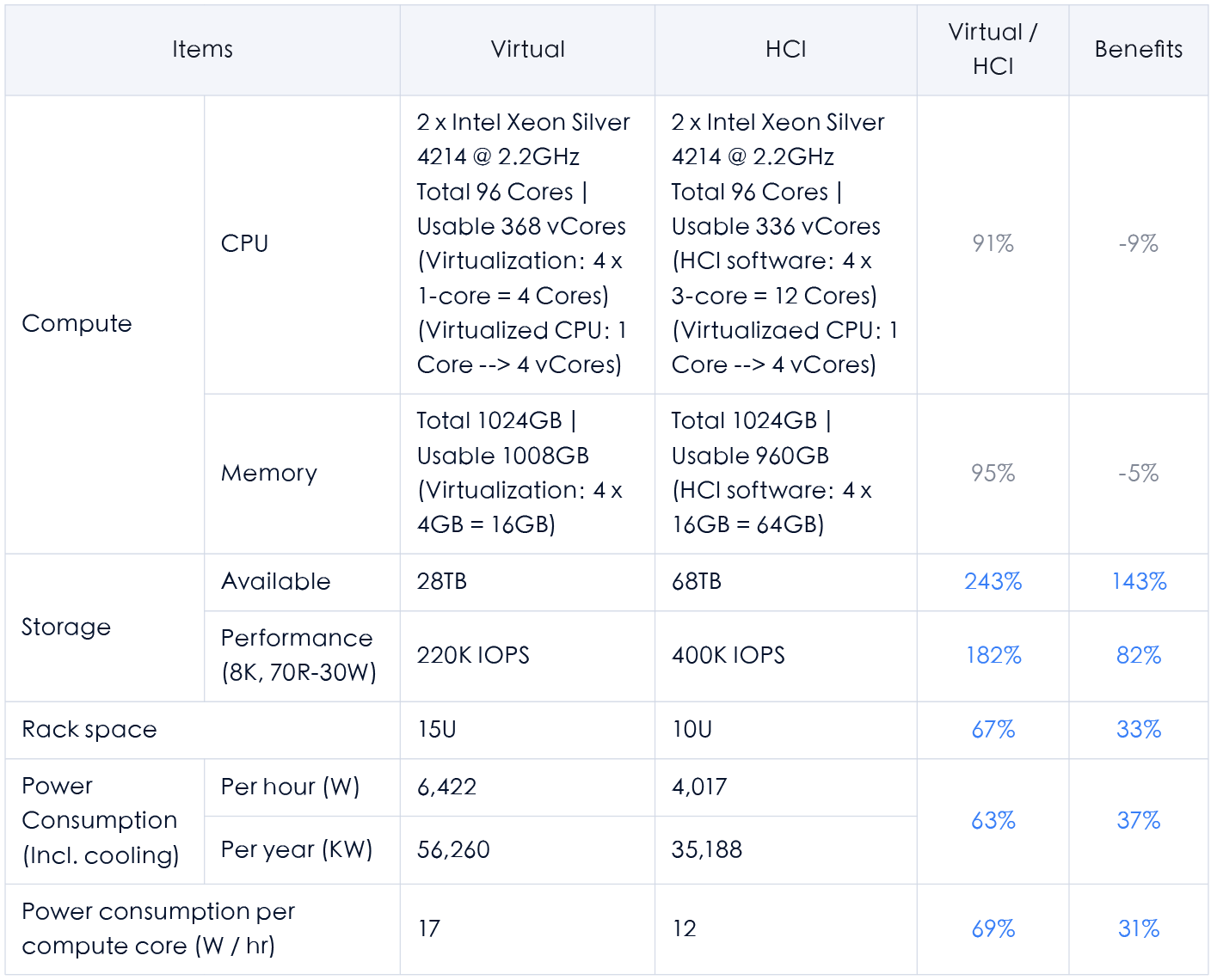

A comparative calculation based on generic scenarios shows that moving from a traditional physical environment to a traditional virtual environment saves 20–80% of energy through the virtualization layer alone, and that moving from a traditional virtual environment to HCI saves up to a further 31% by integrating distributed storage into the compute nodes. The detailed calculations follow.

(Note: The following calculations are theoretical. Physical servers consume different amounts of energy under different loads, and different server models perform differently. Network equipment such as switches is not taken into account.)

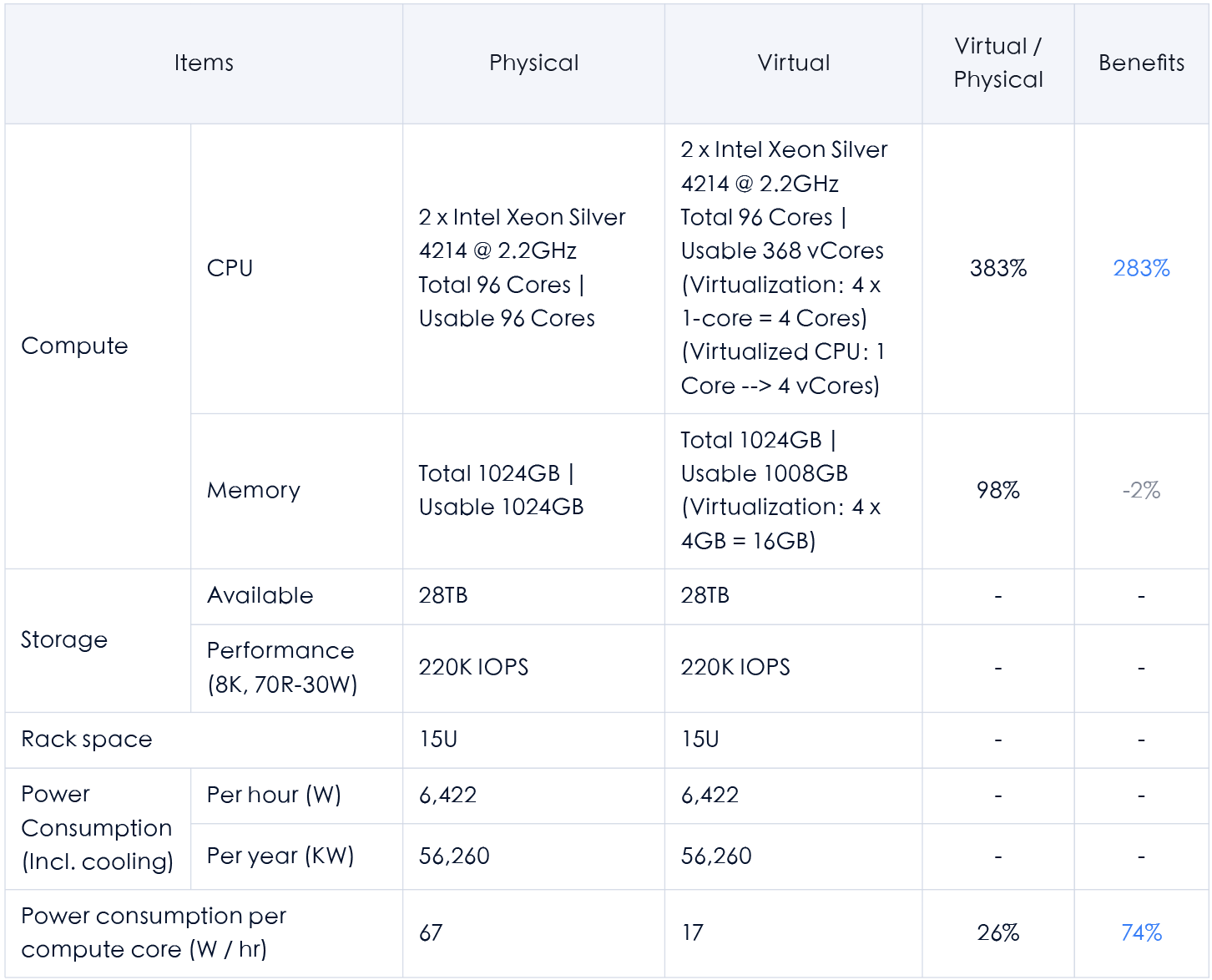

Compute virtualization: 20–80% energy savings

Compute virtualization is the key to improving energy efficiency at the IT infrastructure level. It moves workloads from dedicated physical machines onto virtual machines. By reducing the number of physical servers and raising the utilization of IT resources, it lets data centers support larger workloads with less infrastructure.
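To make "fewer servers, higher utilization" concrete, here is a minimal consolidation sketch. It uses first-fit decreasing, a common bin-packing heuristic, to place workloads onto as few hosts as possible. This is an illustrative technique, not how any particular hypervisor or SmartX product schedules VMs; the function name, the core demands, and the 16-core host size are all hypothetical, and only CPU is considered (memory, storage, and headroom are ignored).

```python
from typing import List


def consolidate(demands: List[float], host_capacity: float) -> List[List[float]]:
    """Pack workload CPU demands onto as few hosts as possible (first-fit decreasing)."""
    hosts: List[List[float]] = []   # workloads placed on each host
    free: List[float] = []          # remaining capacity on each host
    for d in sorted(demands, reverse=True):
        if d > host_capacity:
            raise ValueError(f"workload {d} exceeds host capacity {host_capacity}")
        for i, room in enumerate(free):
            if d <= room:           # first existing host with enough room
                hosts[i].append(d)
                free[i] -= d
                break
        else:                       # no host fits: power on a new one
            hosts.append([d])
            free.append(host_capacity - d)
    return hosts


# Hypothetical: ten under-used 16-core servers, each busy on this many cores.
demands = [3, 2, 4, 1, 2, 3, 2, 1, 4, 2]
HOST_CORES = 16
hosts = consolidate(demands, HOST_CORES)
before = sum(demands) / (len(demands) * HOST_CORES)
after = sum(demands) / (len(hosts) * HOST_CORES)
print(f"{len(demands)} servers -> {len(hosts)} hosts, utilization {before:.0%} -> {after:.0%}")
# 10 servers -> 2 hosts, utilization 15% -> 75%
```

Sorting largest-first lets big workloads claim space before small ones fragment it; in practice a scheduler would also keep spare capacity for peaks and failover.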

According to IDC, the more densely virtualized the compute, storage, and networking infrastructure layers in data centers, the lower their carbon impact.

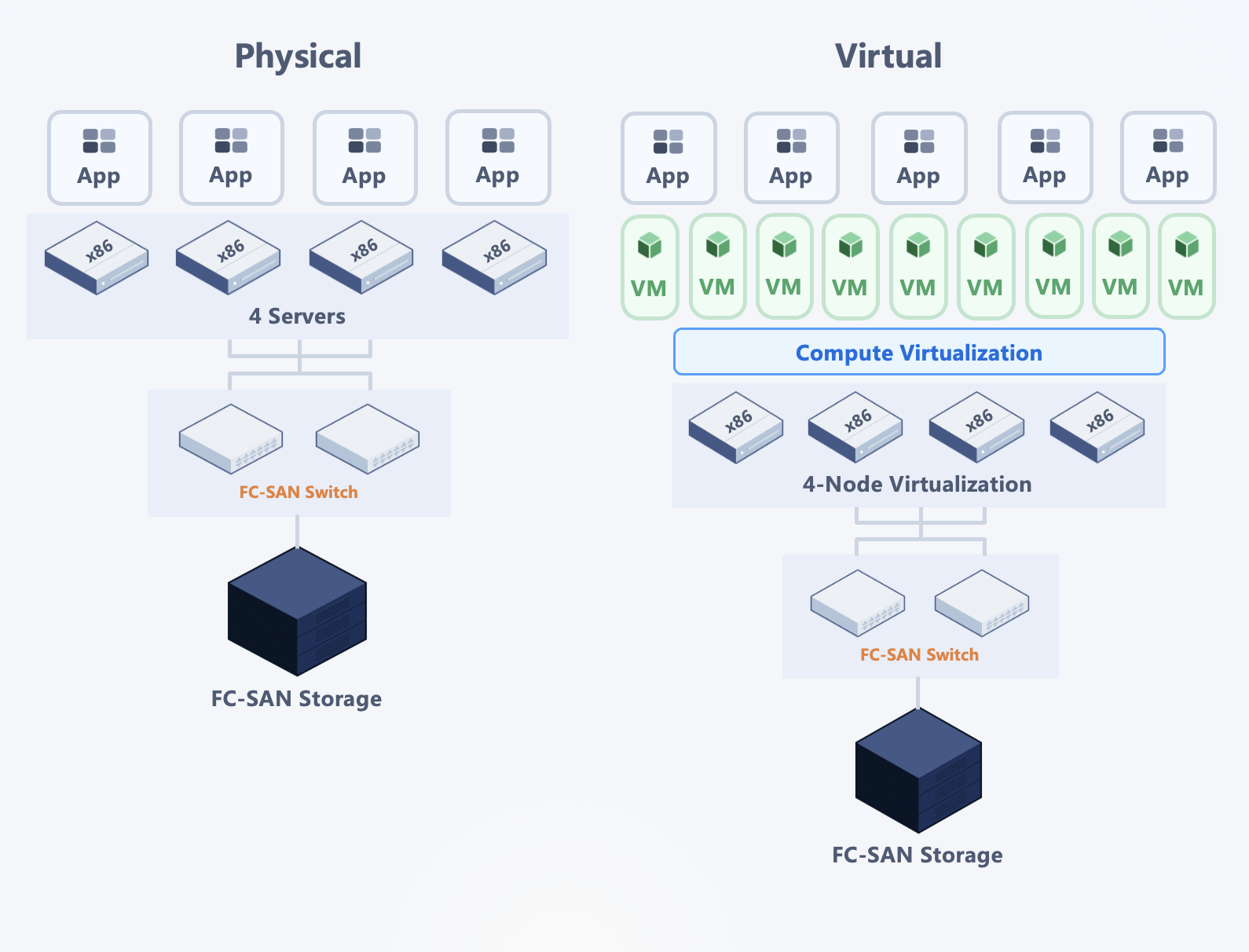

Taking a configuration of 4 physical servers and 1 storage system as an example, replacing physical machines with virtual machines saves about 20–80% of energy, depending on VM deployment density.

Traditional Physical Environment Vs. Traditional Virtual Environment

In this scenario, the main difference between the two architectures is how fully they use compute resources: on the same hardware, the higher the utilization, the greater the energy savings. By using CPU resources more fully, the virtualized architecture reduces average energy consumption per compute core by about 74%. (The preset CPU allocation ratio here is 1:4, meaning each physical core backs four virtual CPUs, a ratio typically used for medium to heavy compute workloads.)
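The effect of the allocation ratio can be sketched with a simple idealized model: divide a host's energy by the number of compute cores it serves, where a 1:4 ratio makes each physical core serve four virtual CPUs. The model assumes the host draws the same energy whether it runs bare metal or virtualized, which ignores the extra power a busier host consumes, so its 75% should be read as an idealized figure next to the roughly 74% reported above. The host size and energy value are hypothetical.

```python
def energy_per_vcpu(host_energy_wh: float, physical_cores: int, vcpus_per_core: int = 1) -> float:
    """Host energy divided over the compute cores it serves.

    vcpus_per_core = 1 models bare metal; 4 models a 1:4 CPU allocation ratio.
    """
    return host_energy_wh / (physical_cores * vcpus_per_core)


def reduction(baseline: float, improved: float) -> float:
    """Fractional reduction of `improved` relative to `baseline`."""
    return 1 - improved / baseline


# Hypothetical host: 32 physical cores, 1,000 Wh over the measurement period.
# Idealized assumption: the host draws the same energy in both cases.
bare_metal = energy_per_vcpu(1_000, 32)
virtualized = energy_per_vcpu(1_000, 32, vcpus_per_core=4)
print(f"per-core reduction at 1:4: {reduction(bare_metal, virtualized):.0%}")  # 75%
```

Under this model the host's energy and core count cancel out, so the reduction depends only on the ratio: 1 − 1/r for a 1:r allocation.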

In real-world deployments, VM density also affects the energy savings:

- In high-density scenarios (1:20, i.e. one physical server hosting 20 VMs), average energy consumption per VM is 321 Wh, about 80% lower than that of a physical server.

- In low-density scenarios (1:5, i.e. one physical server hosting 5 VMs), average energy consumption per VM is 1,284 Wh, about 20% lower than that of a physical server.
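The two per-VM figures above can be reproduced with a short calculation. Both are consistent with a fixed energy total of 6,420 Wh spread evenly across the VMs (321 × 20 = 1,284 × 5 = 6,420), and both percentages point to a physical-server baseline of about 1,605 Wh (321 / 0.2 = 1,284 / 0.8 = 1,605). These two totals are back-calculated from the article's numbers rather than stated in it, so treat them as assumptions; the function names are also illustrative.

```python
def per_vm_energy(total_energy_wh: float, vm_count: int) -> float:
    """Spread a fixed energy total evenly across the VMs it supports."""
    return total_energy_wh / vm_count


def saving_vs_physical(per_vm_wh: float, physical_server_wh: float) -> float:
    """Fractional saving of one VM relative to one dedicated physical server."""
    return 1 - per_vm_wh / physical_server_wh


# Back-calculated from the article's figures (assumptions, not stated values):
TOTAL_WH = 6_420      # 321 Wh x 20 VMs = 1,284 Wh x 5 VMs
PHYSICAL_WH = 1_605   # 321 Wh is 80% below this; 1,284 Wh is 20% below it

for vms in (5, 20):
    e = per_vm_energy(TOTAL_WH, vms)
    print(f"1:{vms:<2} -> {e:,.0f} Wh per VM, {saving_vs_physical(e, PHYSICAL_WH):.0%} saving")
```

Because the total is fixed, each additional VM shrinks the per-VM share, so the saving keeps rising with density while never reaching 100%.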

If the CPU allocation ratio is further increased, the energy consumption gap between physical and virtual environments will be even larger.

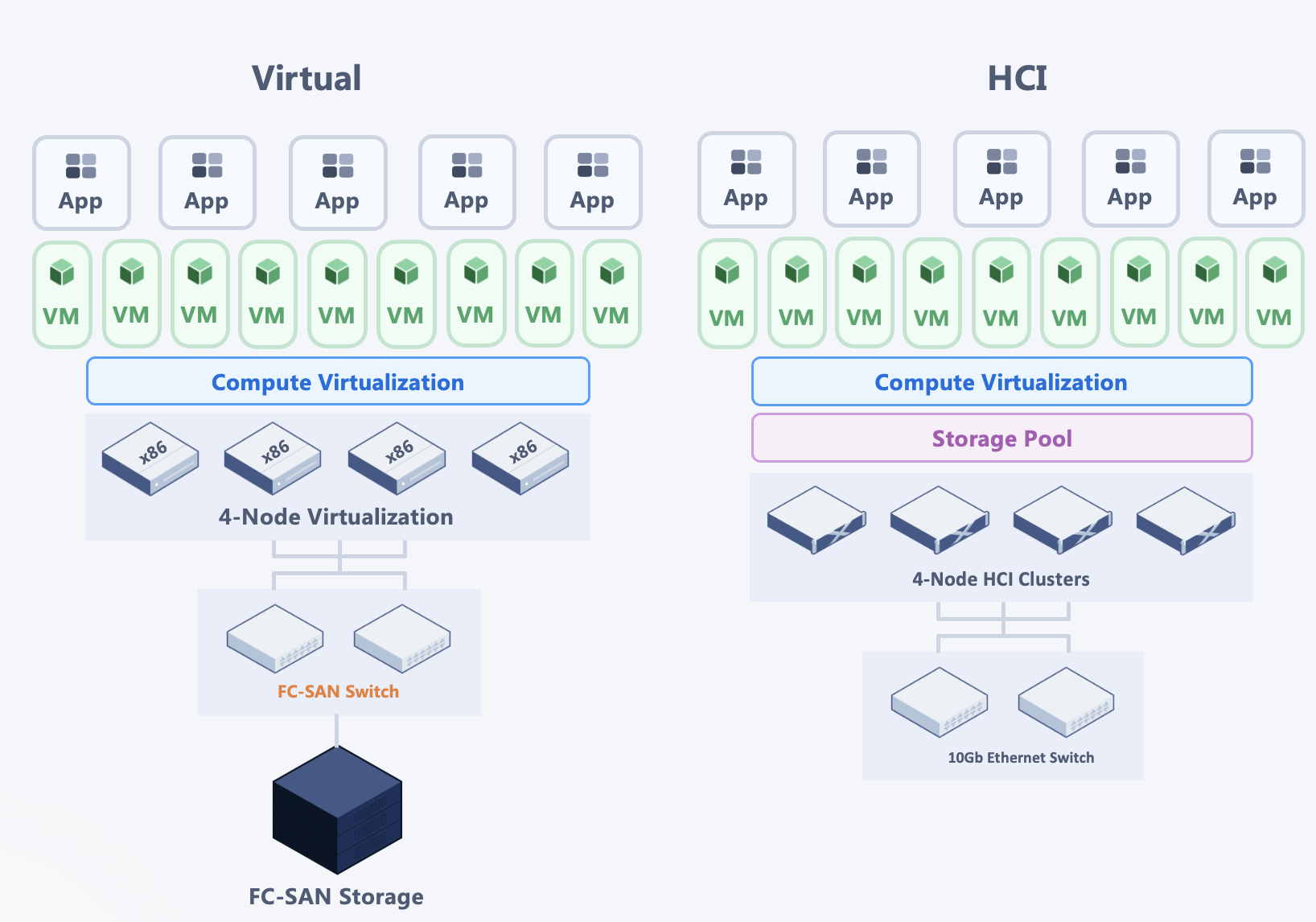

Converged storage and compute: up to 31% further energy savings

HCI runs compute and storage on the same physical servers, eliminating the need for traditional centralized storage. This saves further energy on top of the reduction that virtualization already delivers.

Starting from the same hardware configuration (4 physical servers and 1 storage system), HCI can cut average energy consumption per compute core by a further 31% or so by removing the traditional centralized storage hardware.
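A minimal sketch of where this saving comes from: with the same servers and cores, removing the centralized storage system cuts energy per core by exactly the storage system's share of the cluster's total energy. The sketch assumes HCI servers draw the same energy as before (in reality their local disks add some draw), and the wattages and core counts are hypothetical, so it illustrates the mechanism rather than reproducing the 31% figure. It also shows how that share shrinks as servers are added while the storage system stays fixed.

```python
def cluster_energy_per_core(server_wh: float, servers: int, cores_per_server: int,
                            storage_wh: float = 0.0) -> float:
    """Average energy per physical compute core for a cluster.

    storage_wh is the draw of a separate centralized storage system;
    leave it at 0 for HCI, where storage runs on the servers themselves.
    """
    total_cores = servers * cores_per_server
    return (servers * server_wh + storage_wh) / total_cores


def hci_saving(server_wh: float, servers: int, cores_per_server: int, storage_wh: float) -> float:
    """Per-core saving from dropping the centralized storage system."""
    traditional = cluster_energy_per_core(server_wh, servers, cores_per_server, storage_wh)
    hci = cluster_energy_per_core(server_wh, servers, cores_per_server)
    return 1 - hci / traditional


# Hypothetical: 500 Wh per server, 500 Wh for the storage system, 32 cores per server.
for n in (4, 8, 16):
    print(f"{n:>2} servers: {hci_saving(500, n, 32, 500):.1%} per-core saving")
```

With these made-up values the saving is 20% at 4 servers and falls as the server count grows, because a fixed storage draw becomes a smaller fraction of the total.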

Traditional Virtual Environment Vs. HCI

Without centralized storage devices, HCI shows a significant increase in storage capacity and a notable decrease in average energy consumption per compute core.

The scenario above consists of 4 physical servers plus 1 storage system. If only the number of physical servers were increased, the energy consumption of the two architectures would converge, because the storage system's fixed draw would be spread over more servers. In practice, however, more compute resources (physical servers) usually bring more demand for storage resources (performance and capacity).

That is why, in actual deployments, a traditional virtualized architecture that scales up both compute and storage still differs markedly from HCI in overall energy consumption.

SmartX offers customers flexible options: a software-only solution or an all-in-one appliance. With the software-only option, clients deploy their IT infrastructure on SMTX OS, which has the ELF compute virtualization and ZBS distributed storage components built in, integrates with VMware vSphere and Citrix XenServer virtualization, and runs on standard commercial servers, reducing hardware procurement and resource usage. Alternatively, clients can use the Halo P series appliances with SMTX OS pre-installed, which take up less rack space.

Conclusion

Enterprises play a vital role in reducing carbon emissions, and technology companies have an important mission to use their influence and technical capabilities to advance carbon neutrality.

Data centers currently account for only a small share of total electricity consumption, but that share is still growing. For an individual enterprise, moreover, data centers can make up a significant portion of its overall carbon footprint.

Alongside non-IT measures, enterprises can adopt innovative IT infrastructure architectures to accelerate the reduction of their data centers' carbon emissions.

SmartX is committed to building innovative enterprise cloud infrastructure for businesses. Driven by customer obsession and the idea of tech for good, we are also helping our clients reduce the carbon impact of their data centers with leading virtualization and HCI products and solutions.

References

- IPCC report: ‘Code red’ for human driven global heating, warns UN chief. https://news.un.org/en/story/2021/08/1097362

- Infrastructure Virtualization Leads the Way in Reducing the Carbon Cost of Growth. https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/company/vmware-infrastructure-virtualization-leads-the-way-in-reducing-the-carbon-cost-of-growth.pdf