The peak performance of a distributed storage cluster is ultimately determined by its hardware configuration. However, if unexpected problems such as hard disk or node failures occur and trigger data recovery during peak business hours, users face a hard choice: which should take priority, accelerating data recovery or preserving application performance?

To solve this problem, SmartX introduced an automatic data recovery policy for its distributed block storage, ZBS. Notably, both SMTX OS (SmartX’s core HCI software) and SMTX ZBS (SmartX’s distributed block storage product) include ZBS.

In this article, we explain how the automatic data recovery policy balances the I/O performance between data recovery and application processing.

Our Solutions

Version 3.0 / 3.5

To keep servers from being overloaded and slowing down mission-critical applications, we capped data recovery at 100 MiB/s and data migration at 40 MiB/s per node.

Version 4.0

Since SMTX OS version 4.0, we have provided an automatic data recovery/migration policy. It offers two modes and lets users conveniently customize the recovery/migration speed.

- AUTO (default mode): In this mode, the server prioritizes application performance and automatically adjusts the speed of data recovery/migration according to each node’s application workload.

- STATIC: In this mode, users can set the cluster’s maximum data recovery speed. If users prefer better application performance, the recovery speed can be set lower, for example to 40 MiB/s, and vice versa. Once set, the limit applies to all nodes in the cluster.

Notably, the recovery speed ranges from 1 MiB/s to 500 MiB/s, with a default setting at 100 MiB/s.
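The two modes and the permitted range can be pictured as a small validated setting. The sketch below is purely illustrative: `RecoveryPolicy` and its field names are hypothetical and are not part of the SMTX OS or ZBS interface. Only the mode names, the 1 to 500 MiB/s range, and the 100 MiB/s default come from the description above.

```python
from dataclasses import dataclass

# Values taken from the text above; the names are hypothetical.
MIN_LIMIT_MIB_S = 1
MAX_LIMIT_MIB_S = 500
DEFAULT_LIMIT_MIB_S = 100


@dataclass(frozen=True)
class RecoveryPolicy:
    """Hypothetical cluster-wide recovery policy setting (not a real ZBS API)."""

    mode: str = "AUTO"                              # AUTO is the default mode
    static_limit_mib_s: int = DEFAULT_LIMIT_MIB_S   # only meaningful in STATIC mode

    def __post_init__(self):
        # Reject anything outside the two documented modes.
        if self.mode not in ("AUTO", "STATIC"):
            raise ValueError(f"unknown mode: {self.mode!r}")
        # Reject limits outside the documented 1-500 MiB/s range.
        if not MIN_LIMIT_MIB_S <= self.static_limit_mib_s <= MAX_LIMIT_MIB_S:
            raise ValueError(
                f"limit must be between {MIN_LIMIT_MIB_S} and "
                f"{MAX_LIMIT_MIB_S} MiB/s, got {self.static_limit_mib_s}"
            )


# A cluster that favors application performance over recovery speed.
conservative = RecoveryPolicy(mode="STATIC", static_limit_mib_s=40)
print(conservative)
```

Validating at construction time mirrors the idea that a single cluster-wide value is applied to every node, so an out-of-range value should be rejected before it spreads.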

Version 5.0.0

We refined the data recovery policy to take each node’s hardware configuration into account. That is, with the updated strategy, each node adaptively sets its speed limit for data recovery/migration according to the capabilities of its storage network (10 GbE or 25 GbE, and whether RDMA is enabled) and storage media (SATA HDD, SATA SSD, or NVMe SSD).

Latest version

To fully leverage hardware’s data processing capabilities, SmartX further refined the strategy and increased the number of recovery commands that the storage system processes at a time by 28%. This is applied to the following versions:

- SMTX OS 4.0.12 and above

- SMTX OS 5.0.3 and above

- SMTX ZBS 5.1.0 and above

Application I/O vs. Recovery/Migration I/O

In SmartX distributed storage, I/O is divided into application I/O and recovery/migration I/O. SmartX’s independently developed storage engine automatically monitors both types and prioritizes application performance. This check runs every 4 seconds. Whether application I/O is idle or busy is determined by comparing its IOPS and bandwidth (BW) with preset thresholds.

- If the application I/O’s IOPS or BW >= the threshold value, the data recovery/migration speed is limited to the default value to safeguard application performance.

- If the application I/O’s IOPS and BW < the threshold value and the recovery/migration speed exceeds 80% of the threshold value, the system automatically raises the threshold value (by 50% each time, until the speed ceiling is reached) to accelerate data recovery.

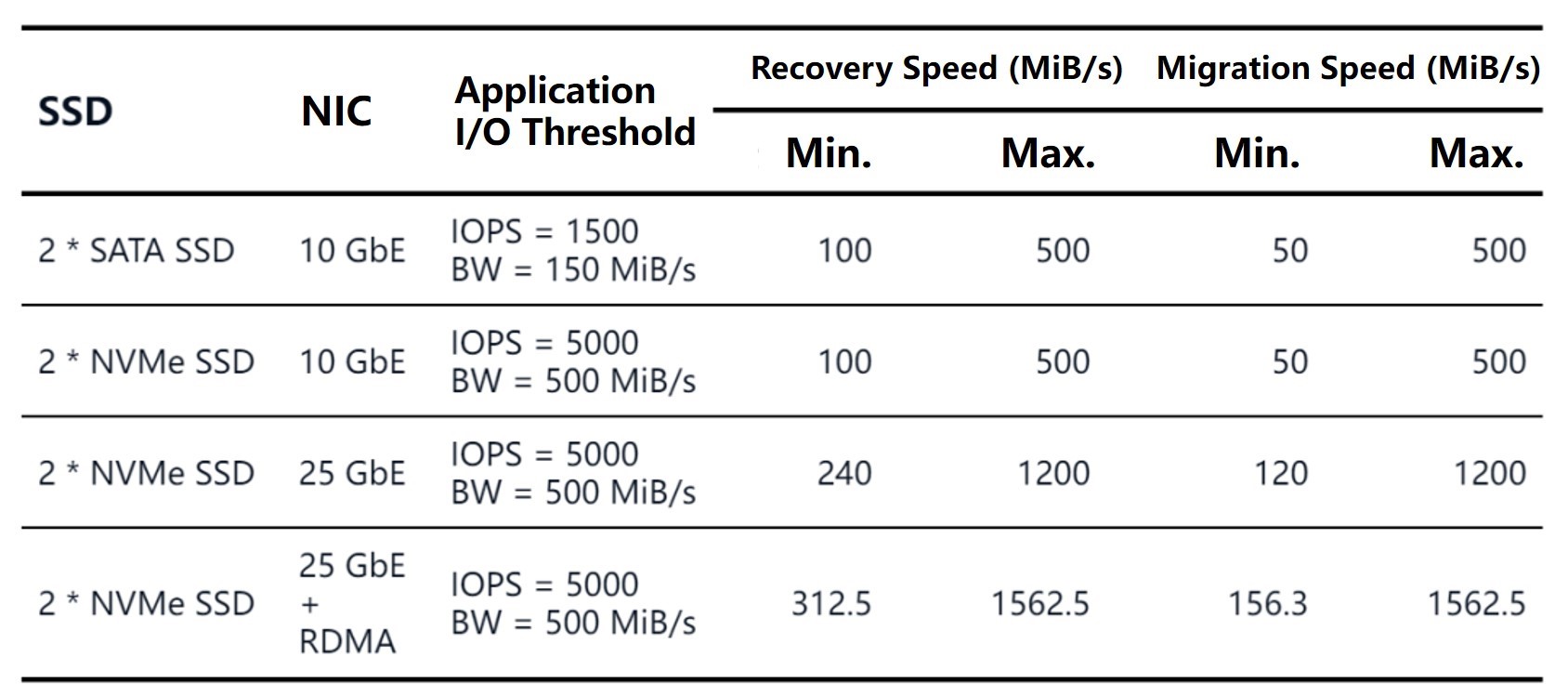
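The two rules above can be sketched as a single decision function that is evaluated once per 4-second cycle. This is a minimal illustration, not the ZBS implementation. It reads the "threshold value" that is raised by 50% as the node's current recovery speed limit, which is an interpretation. All names (`NodeLimits`, `next_recovery_limit`) are hypothetical, and the IOPS and bandwidth thresholds in the example are made-up numbers.

```python
from dataclasses import dataclass


@dataclass
class NodeLimits:
    """Hypothetical per-node settings; real values depend on the hardware."""

    default_limit: float   # MiB/s, limit applied while application I/O is busy
    max_limit: float       # MiB/s, ceiling for the recovery speed limit
    iops_threshold: float  # application IOPS at or above this counts as busy
    bw_threshold: float    # application bandwidth (MiB/s) at or above this counts as busy


def next_recovery_limit(limits, current_limit, app_iops, app_bw, recovery_speed):
    """Return the recovery speed limit for the next cycle."""
    # Rule 1: busy application I/O (IOPS OR bandwidth at the threshold)
    # drops the limit back to the default value.
    if app_iops >= limits.iops_threshold or app_bw >= limits.bw_threshold:
        return limits.default_limit
    # Rule 2: idle application I/O and recovery already using more than 80%
    # of the current limit (assumed reading) raises the limit by 50%, capped.
    if recovery_speed > 0.8 * current_limit:
        return min(current_limit * 1.5, limits.max_limit)
    # Otherwise the limit is left unchanged.
    return current_limit


# Illustrative values only: 100 and 500 MiB/s echo the speeds mentioned in
# this article, while the two thresholds are invented for the example.
limits = NodeLimits(default_limit=100.0, max_limit=500.0,
                    iops_threshold=500.0, bw_threshold=50.0)

limit = limits.default_limit
trace = []
for _ in range(4):  # four idle cycles with recovery saturating the limit
    limit = next_recovery_limit(limits, limit, app_iops=0, app_bw=0,
                                recovery_speed=limit)
    trace.append(limit)
print(trace)  # [150.0, 225.0, 337.5, 500.0]
```

Under these assumed numbers, an idle node climbs from 100 MiB/s to the 500 MiB/s ceiling in four cycles, while a single busy cycle returns it to the default immediately. That asymmetry is what lets the policy favor application performance.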

Take several common hardware configurations as examples. The speed range of data recovery/migration and application I/O threshold value are as follows:

Proof of concept

We further examined the effectiveness of the automatic recovery strategy. The test ran on an HCI cluster consisting of 3 servers. We triggered data recovery by shutting down one of the nodes and ran FIO inside a virtual machine to simulate application I/O.

Testing environment

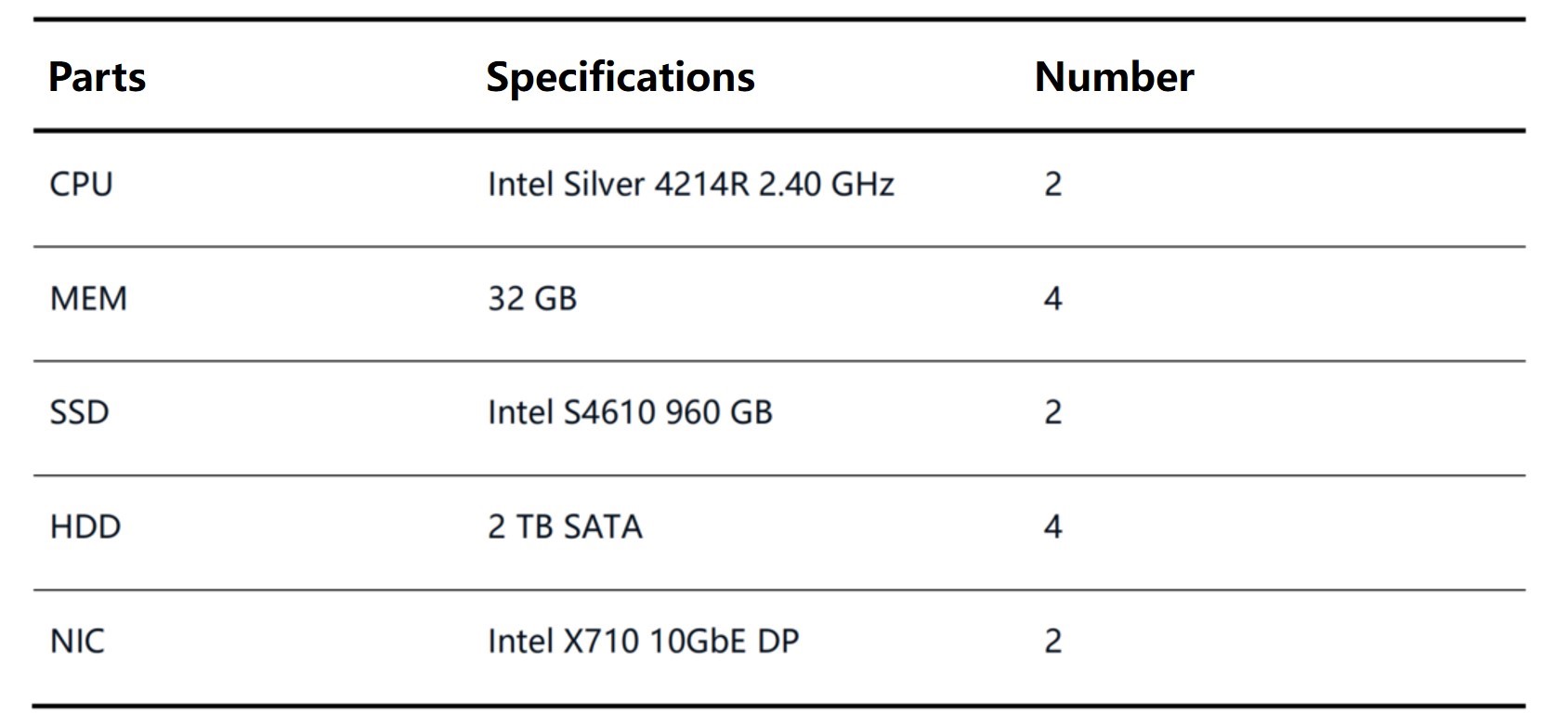

Hardware configuration

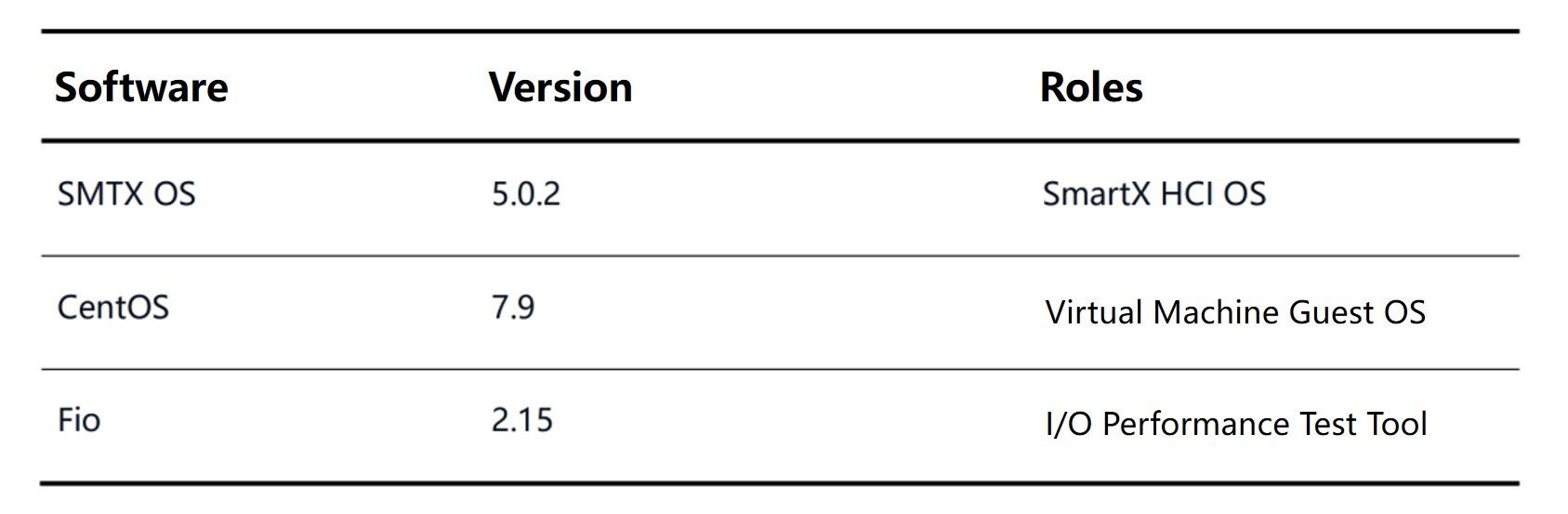

Software version

Testing network

10 GbE network, with RDMA disabled.

Procedure

1. Placed the virtual machine vm-01 on the first server, node-01.

2. Shut down the third server, node-03, to generate a large demand for data recovery.

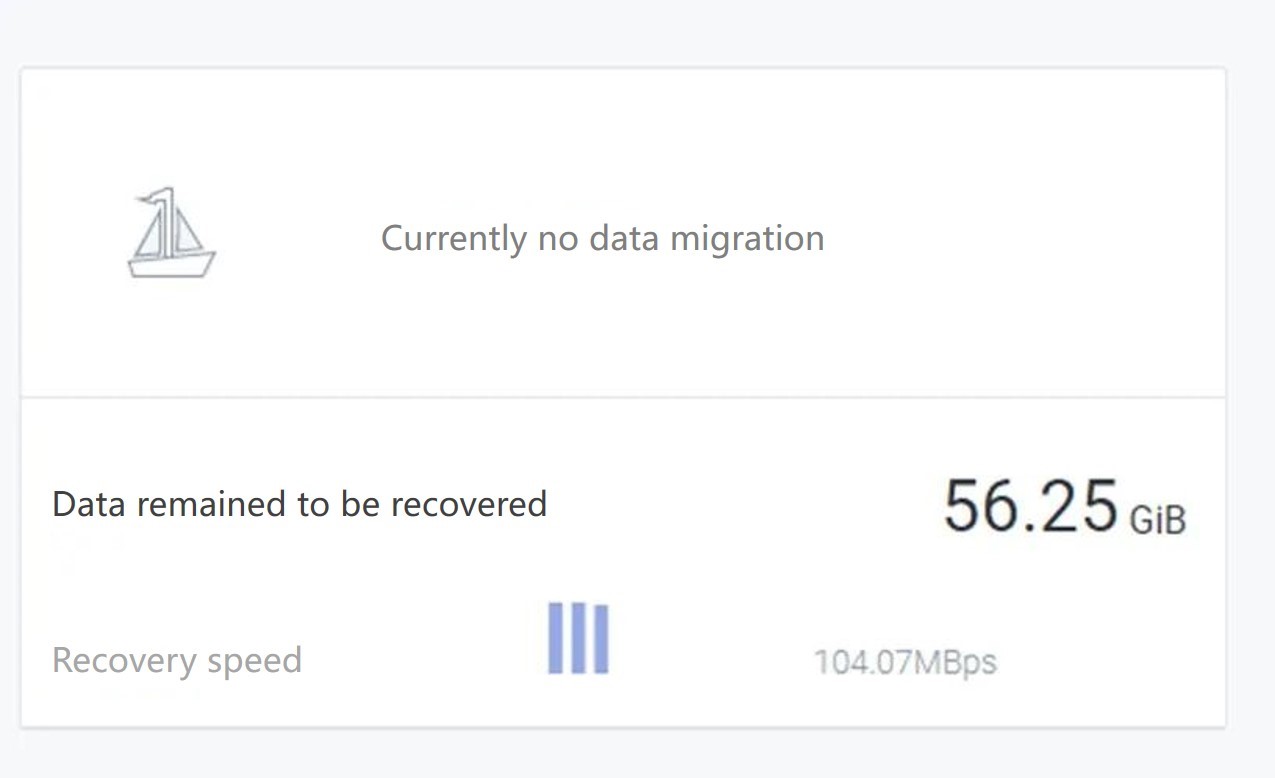

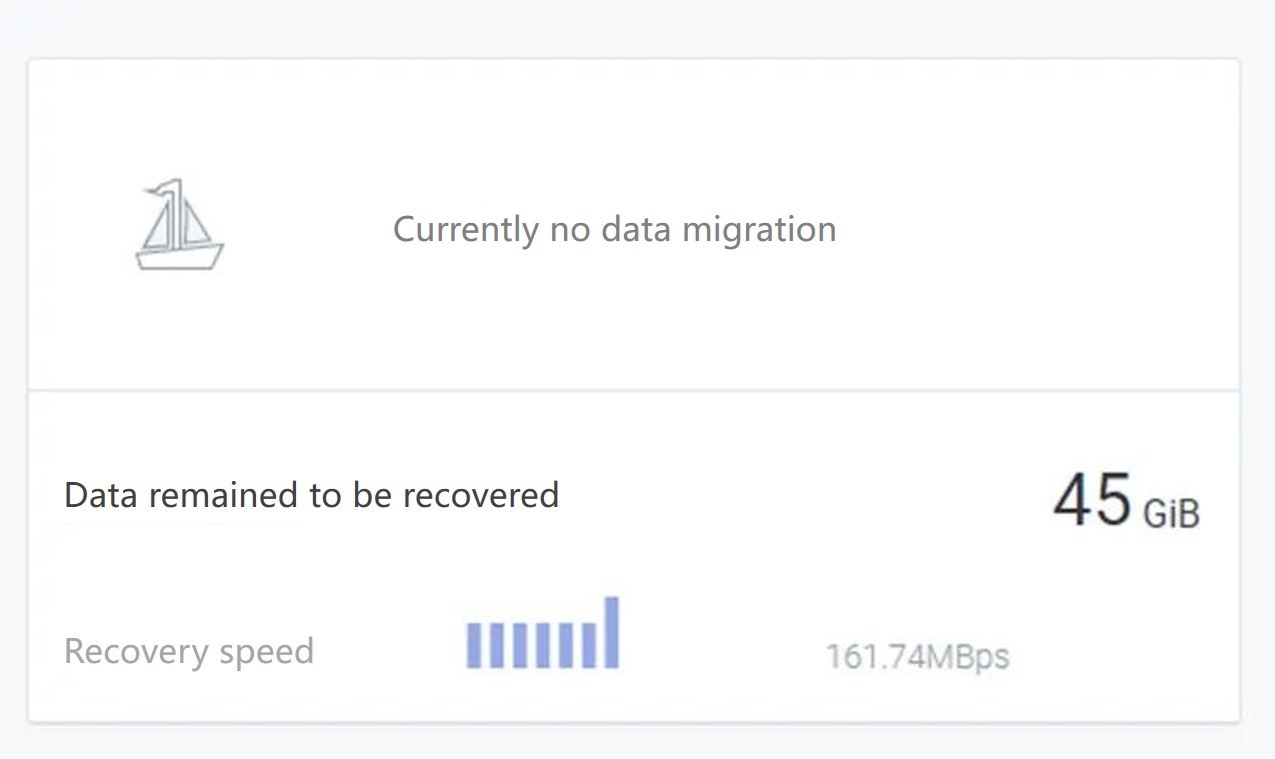

3. Observed that the data recovery speed of node-01 was around 500 MiB/s.

4. Ran the following command in the virtual machine vm-01 to simulate application I/O:

| fio --ioengine=libaio --invalidate=1 --rw=randwrite --iodepth=128 --direct=1 --size=100g --name=smtx-fio --bs=256k --filename=/dev/vdb --time_based --runtime=3600 |

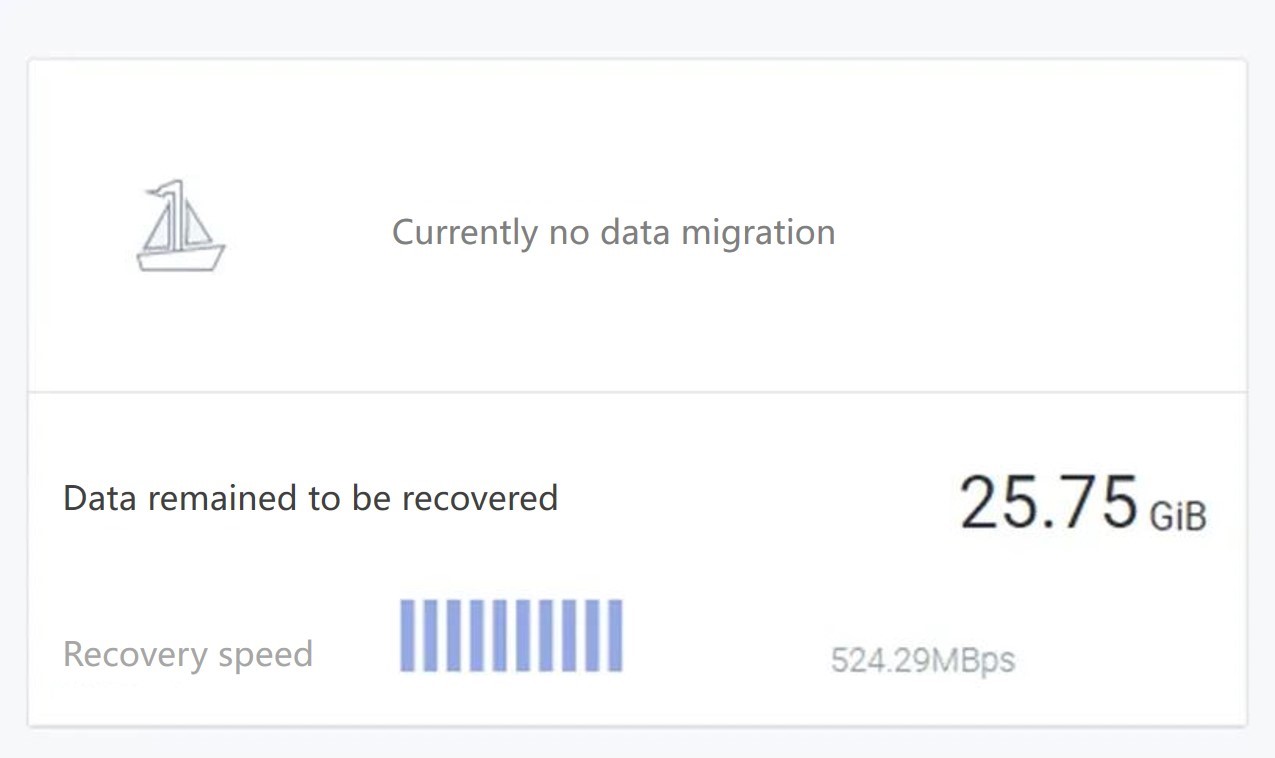

5. The data recovery speed of node-01 decreased to below 100 MiB/s.

6. Stopped the FIO job in vm-01, and the data recovery speed of node-01 gradually increased from 100 MiB/s to 500 MiB/s.

Conclusion

In short, during data recovery, SmartX’s automatic data recovery policy prioritizes application I/O performance and elastically adjusts the speed of data recovery/migration to avoid overloading the system with I/O. The ultimate goal is to make data recovery as efficient as possible while keeping application performance stable.

Protecting SmartX customers from potential business risks and reducing O&M complexity has always been our long-term goal. Besides the automatic data recovery/migration policy, we also use intelligent disk exception handling mechanisms, such as unhealthy and sub-healthy disk detection, to handle hardware failures automatically, ensuring business stability and simplifying O&M.