In this article, we focus on SPDK Vhost-user and explain how to leverage this technology to improve the I/O performance of Virtio-BLK/Virtio-SCSI storage in KVM. In particular, we tested vhost-user’s performance on SMTX OS, the SmartX HCI core software.

Currently, there are two mainstream I/O device virtualization solutions:

- QEMU – pure software emulation: QEMU presents fully software-emulated I/O devices to VMs.

- Virtio – paravirtualization: In this scheme, the front-end and back-end follow the official Virtio specification. The front-end driver (Virtio driver) runs in the VM (Guest OS), while the back-end device (Virtio device) runs in the hypervisor (QEMU) to provide I/O capabilities. I/O performance improves because the number of memory copies and VM traps is reduced. This scheme requires the Virtio driver to be installed in the Guest OS.

Vhost is a technology derived from the Virtio standard that optimizes the I/O path and improves the I/O performance of paravirtual Virtio devices in QEMU.

Introduction of Virtio

Thanks to the Vring I/O communication mechanism, Virtio effectively reduces I/O latency and performs better than QEMU’s pure software emulation. This is why Virtio has become a prevalent paravirtualization solution for I/O devices across vendors.

In QEMU, the Virtio device is a PCI/PCIe device emulated for the guest operating system. It complies with the PCI standard and provides a configuration space and interrupt functions. Notably, Virtio has a registered PCI vendor ID (0x1AF4) and a set of device IDs, where each device ID represents a device type. For example, the device ID for the storage device Virtio-BLK is 0x1001, and for Virtio-SCSI it is 0x1004.
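
As a small illustration of how these IDs identify a device, the following Python sketch classifies a PCI vendor/device ID pair using only the values quoted above. The function and table names are hypothetical, and the table is deliberately limited to the two storage devices mentioned here. On Linux, the ID strings could come from files such as `/sys/bus/pci/devices/<address>/vendor` and `device`, which hold hexadecimal text like `0x1af4`.

```python
from typing import Optional

VIRTIO_PCI_VENDOR_ID = 0x1AF4

# Only the two device IDs mentioned in the text; hypothetical table name.
VIRTIO_STORAGE_DEVICE_IDS = {
    0x1001: "virtio-blk",
    0x1004: "virtio-scsi",
}


def parse_pci_id(text: str) -> int:
    """Parse a hexadecimal ID string such as '0x1af4\\n' into an integer."""
    return int(text.strip(), 16)


def classify_virtio_storage(vendor_id: int, device_id: int) -> Optional[str]:
    """Return the Virtio storage device type, or None if the pair is not recognized."""
    if vendor_id != VIRTIO_PCI_VENDOR_ID:
        return None
    return VIRTIO_STORAGE_DEVICE_IDS.get(device_id)


kind = classify_virtio_storage(parse_pci_id("0x1af4\n"), parse_pci_id("0x1001\n"))
```

With the inputs shown, `kind` is `"virtio-blk"`; a pair from any other vendor yields `None`.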

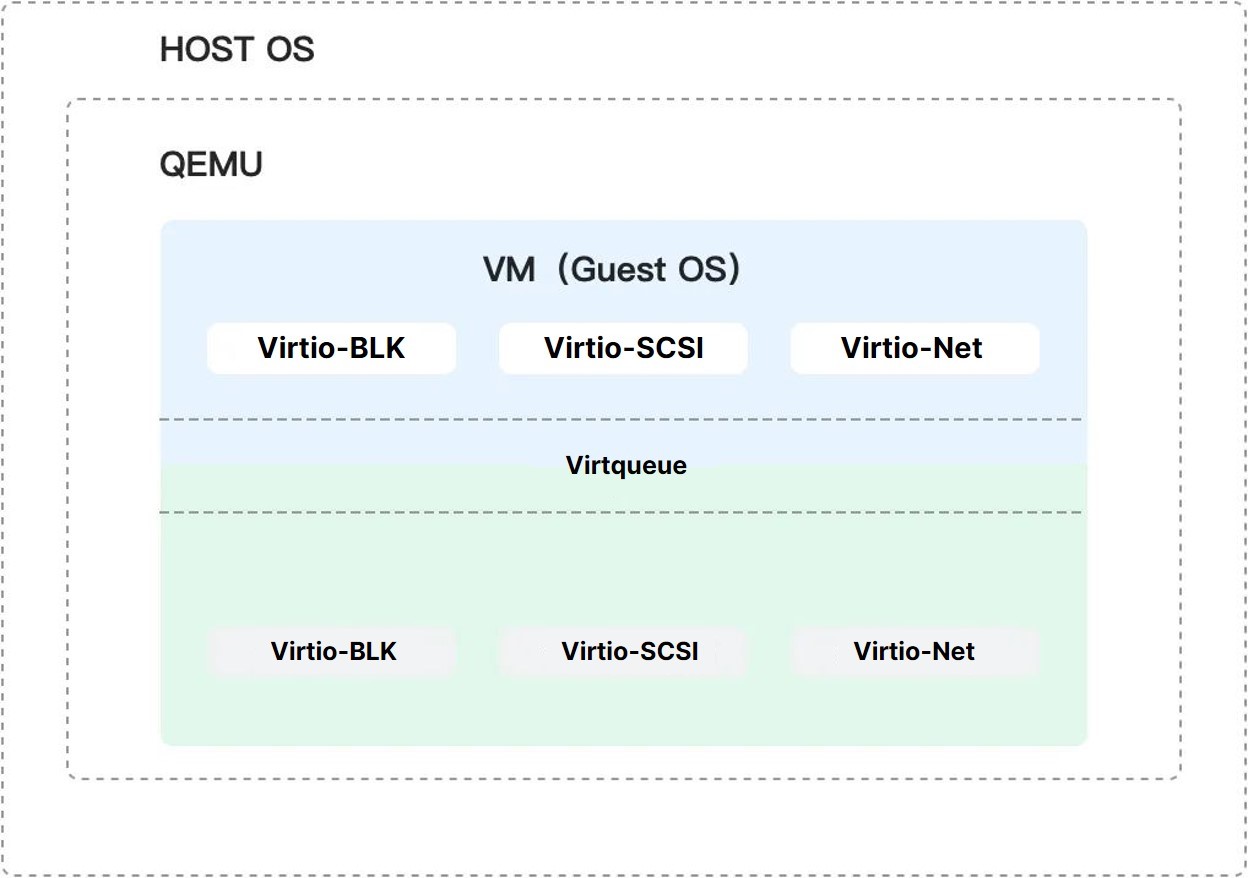

Virtio consists of three parts: 1) the front-end driver layer, which is integrated in the Guest OS; 2) Virtqueue as the middle part, which is responsible for data transmission and command interaction; and 3) the back-end device layer, which processes the requests sent by the Guest OS.
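
To make this three-part structure more concrete, here is a minimal Python sketch of a queue sitting between a driver and a device. It is a simplified model under stated assumptions, not the real Vring memory layout: the `Virtqueue` class and its method names are hypothetical, and payloads are stored directly in the descriptor table instead of being referenced by guest memory address.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class Virtqueue:
    """Toy model: descriptor table plus an 'available' and a 'used' ring."""

    def __init__(self, size: int) -> None:
        self.desc: List[Optional[bytes]] = [None] * size    # descriptor table
        self.free: Deque[int] = deque(range(size))          # unused descriptor slots
        self.avail: Deque[int] = deque()                    # driver -> device
        self.used: Deque[Tuple[int, int]] = deque()         # device -> driver

    # Front-end driver side (Guest OS)
    def add_request(self, payload: bytes) -> int:
        if not self.free:
            raise BufferError("virtqueue is full")
        index = self.free.popleft()
        self.desc[index] = payload
        self.avail.append(index)
        return index

    def reap_used(self) -> List[Tuple[int, int]]:
        """Collect completions and recycle their descriptor slots."""
        done = []
        while self.used:
            index, length = self.used.popleft()
            self.desc[index] = None
            self.free.append(index)
            done.append((index, length))
        return done

    # Back-end device side
    def pop_avail(self) -> Optional[Tuple[int, bytes]]:
        if not self.avail:
            return None
        index = self.avail.popleft()
        return index, self.desc[index]

    def push_used(self, index: int, length: int) -> None:
        self.used.append((index, length))


vq = Virtqueue(size=4)
request_index = vq.add_request(b"read sector 0")   # driver posts a request
head, payload = vq.pop_avail()                     # device picks it up
vq.push_used(head, len(payload))                   # device reports completion
completions = vq.reap_used()                       # driver collects the result
```

The driver only appends to the available ring and the device only appends to the used ring, which is what lets the two sides exchange requests without copying them through an intermediate layer.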

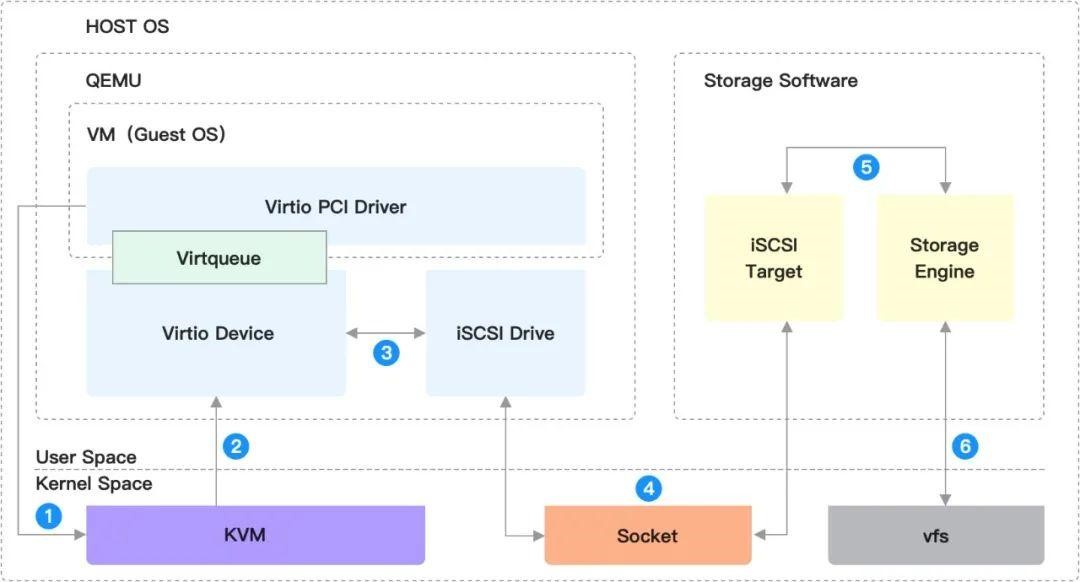

In the hyperconverged architecture (HCI), the data path based on Virtio-BLK is as follows:

- The Guest initiates an I/O operation. The Virtio driver (in the Guest kernel) writes to the PCI configuration space, which triggers a VM exit and returns control to KVM on the host (this is how the Guest notifies KVM);

- QEMU’s vCPU thread returns from KVM kernel mode to QEMU. The QEMU Virtio device then processes the request from the Virtio Vring;

- QEMU initiates a storage connection (iSCSI over TCP to localhost) through its iSCSI driver;

- The request reaches the iSCSI target provided by the storage process through a socket;

- The storage engine receives the request and performs I/O processing;

- The storage engine issues the I/O to the local storage media;

- After the I/O operation completes, the result returns to the Virtio back-end device along the same path. QEMU then sends an interrupt notification to the emulated PCI device, and the Guest completes the I/O operation.

However, this process is inefficient. Because QEMU connects to the storage process through a local socket, the data flow switches between user mode and kernel mode, which adds data copy overhead. The iSCSI protocol layer also adds unnecessary processing cost. If the storage process can receive and process local I/O directly, this overhead can be avoided. In effect, the Virtio back end is offloaded to the storage software.

Vhost acceleration

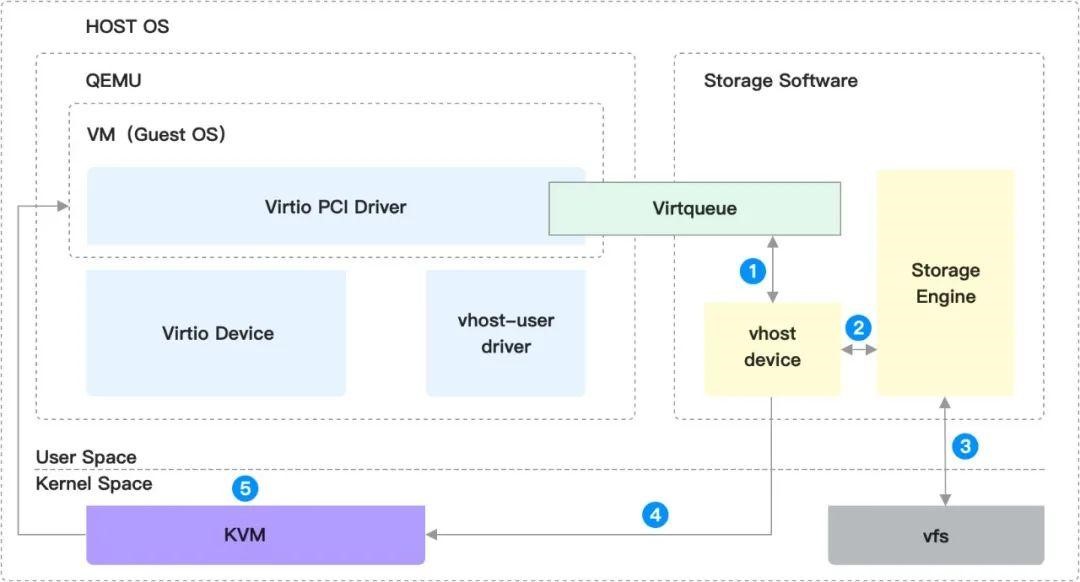

As mentioned above, the Virtio back-end device handles Guest requests and I/O responses. A solution that moves this I/O processing module out of the QEMU process is called vhost. Because our goal in HCI is to optimize the path between two user-mode processes (QEMU and the storage software), we use the vhost-user scheme for storage acceleration. The vhost-kernel scheme, in which the I/O load is processed in the kernel, is not discussed in this article.

The vhost-user data plane has two roles, Master and Slave. Generally, QEMU is the Master, which supplies the Virtqueues, and the storage software is the Slave, which consumes the I/O requests in the Virtqueues.

Advantages of the vhost-user scheme include:

- It eliminates the need for the Guest kernel to write to the PCI configuration space, along with the CPU context-switch overhead of QEMU handling the Guest’s VM traps, because back-end processing threads poll all Virtqueues instead.

- User-mode memory sharing between processes removes data copies on the I/O path (zero copy).

The I/O processing flow is as follows:

- After the Guest initiates an I/O operation, the storage software detects the new request through its polling mechanism and fetches the data from the Virtqueue;

- The storage engine receives the request and performs I/O processing;

- The storage engine issues the I/O to the local storage media;

- After the I/O operation is complete, the vhost device sends an irqfd (eventfd) notification to KVM;

- KVM injects the interrupt into the Guest, notifying the Guest OS that the I/O operation is complete.
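
The steps above can be sketched as a single polling pass in Python. This is an illustrative model only: the function name `poll_virtqueues` is hypothetical, each Virtqueue is reduced to a plain `deque` of requests, and the `notify_guest` callback stands in for signalling the irqfd (eventfd) that KVM turns into a Guest interrupt.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def poll_virtqueues(
    queues: Dict[int, Deque[bytes]],
    handle_io: Callable[[bytes], None],
    notify_guest: Callable[[int], None],
) -> int:
    """Run one polling pass over every queue; return the number of requests completed."""
    completed = 0
    for queue_id, pending in queues.items():
        handled_here = 0
        while pending:                   # polling finds new requests, no VM trap needed
            request = pending.popleft()
            handle_io(request)           # storage engine performs the I/O
            handled_here += 1
        if handled_here:
            notify_guest(queue_id)       # stand-in for the irqfd notification to KVM
        completed += handled_here
    return completed


queues = {0: deque([b"write A", b"read B"]), 1: deque()}
processed: List[bytes] = []
notified: List[int] = []
count = poll_virtqueues(queues, processed.append, notified.append)
```

A real back end would call such a pass in a tight loop on a dedicated thread. The point of the sketch is that request detection costs the back end only a memory check, while the Guest is notified only for queues that actually completed work.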

Note: Control information is transmitted between the front-end vhost driver and the back-end vhost device through a UNIX domain socket file.
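
The mechanism behind this control channel and the zero-copy memory sharing can be illustrated with a short Python sketch (Linux, Python 3.9 or later). It passes a file descriptor over a UNIX domain socket and maps the same memory on both ends. The assumptions are significant: both ends run in one process for brevity, a temporary file stands in for Guest memory, and the `b"mem"` message is a hypothetical placeholder rather than a real vhost-user protocol message.

```python
import mmap
import os
import socket
import tempfile

REGION_SIZE = 4096  # one page standing in for a guest memory region


def share_region_demo() -> bytes:
    """Send a memory fd over a UNIX socket, map it on both sides, and show shared data."""
    master, slave = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
    try:
        with tempfile.TemporaryFile() as backing:
            backing.truncate(REGION_SIZE)
            master_view = mmap.mmap(backing.fileno(), REGION_SIZE)

            # Master side: the fd travels as ancillary data next to a small message.
            socket.send_fds(master, [b"mem"], [backing.fileno()])

            # Slave side: receive its own fd for the same memory and map it.
            _msg, fds, _flags, _addr = socket.recv_fds(slave, 16, 1)
            slave_view = mmap.mmap(fds[0], REGION_SIZE)

            master_view[:5] = b"hello"       # written once by the master...
            seen = bytes(slave_view[:5])     # ...visible to the slave, no socket transfer

            slave_view.close()
            master_view.close()
            os.close(fds[0])
        return seen
    finally:
        master.close()
        slave.close()


shared_bytes = share_region_demo()
```

Only the descriptor and a few control bytes cross the socket. The payload itself is read straight from the shared mapping, which is why the data plane avoids the copies that the socket-based iSCSI path incurs.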

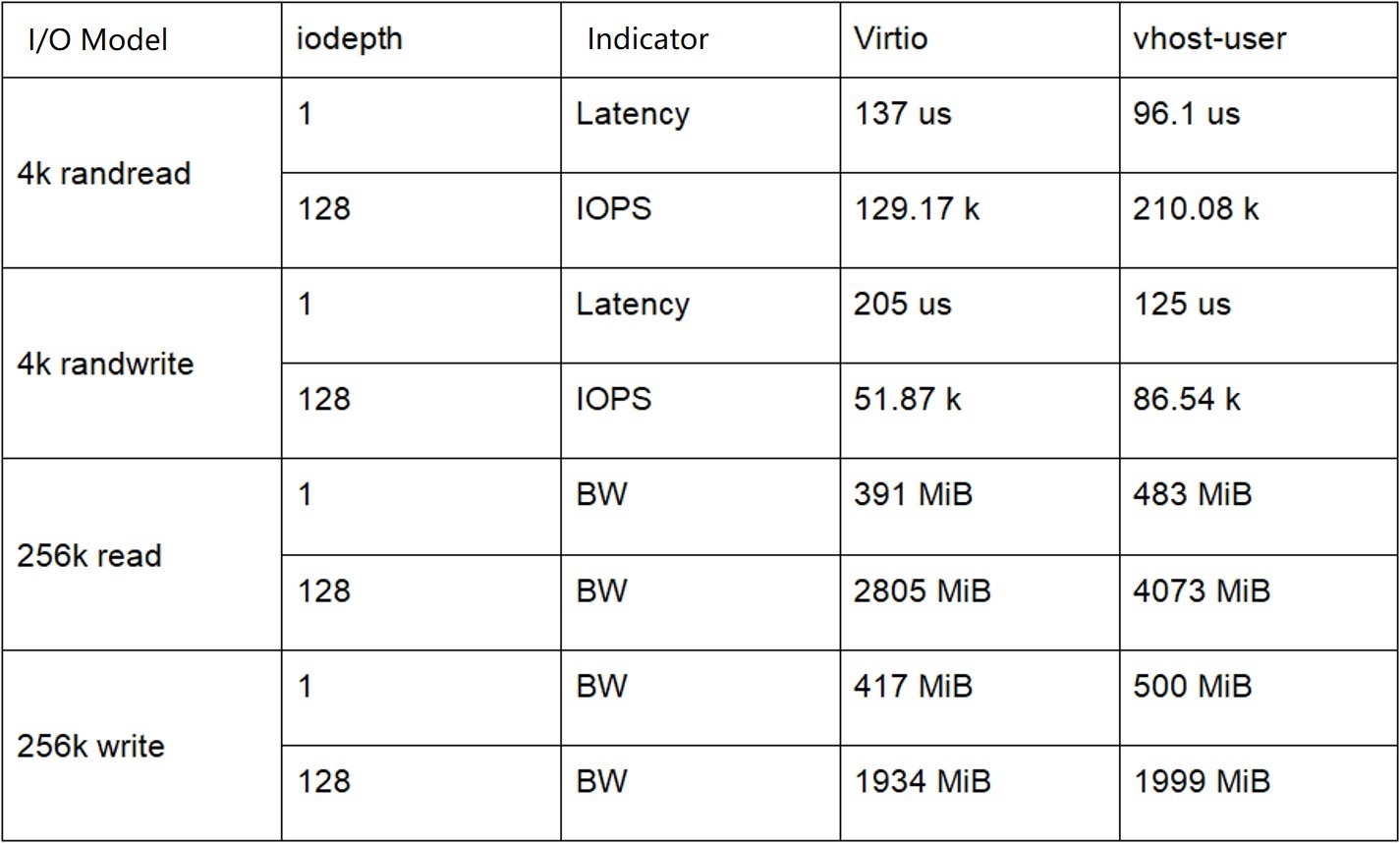

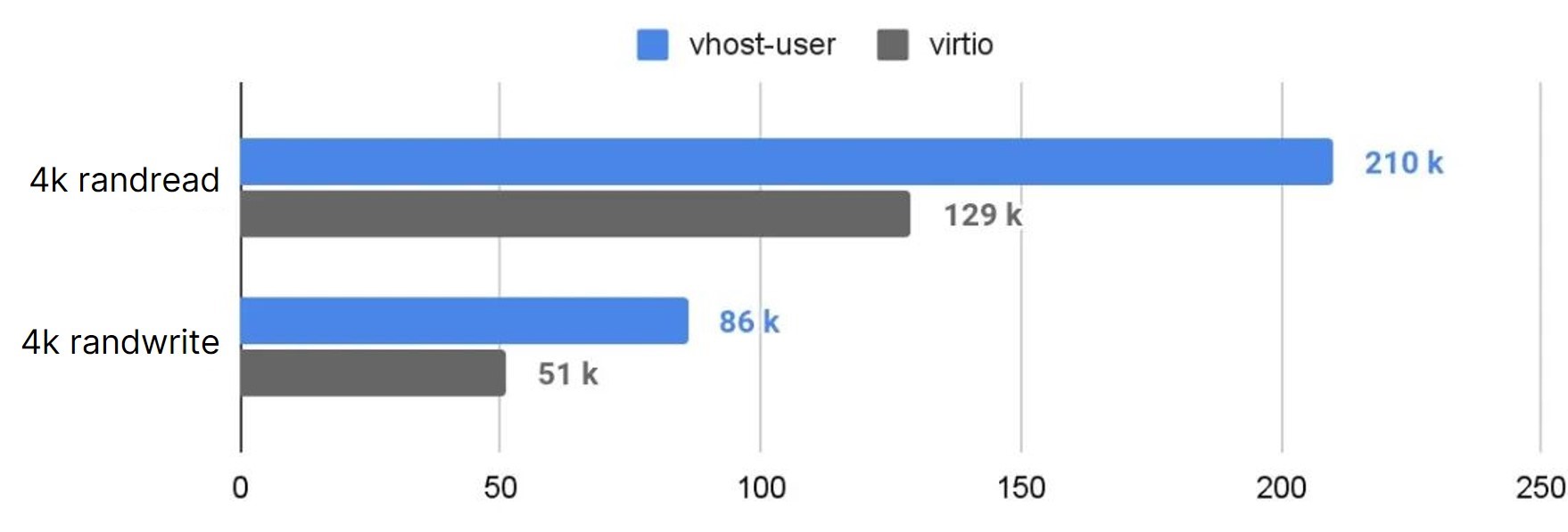

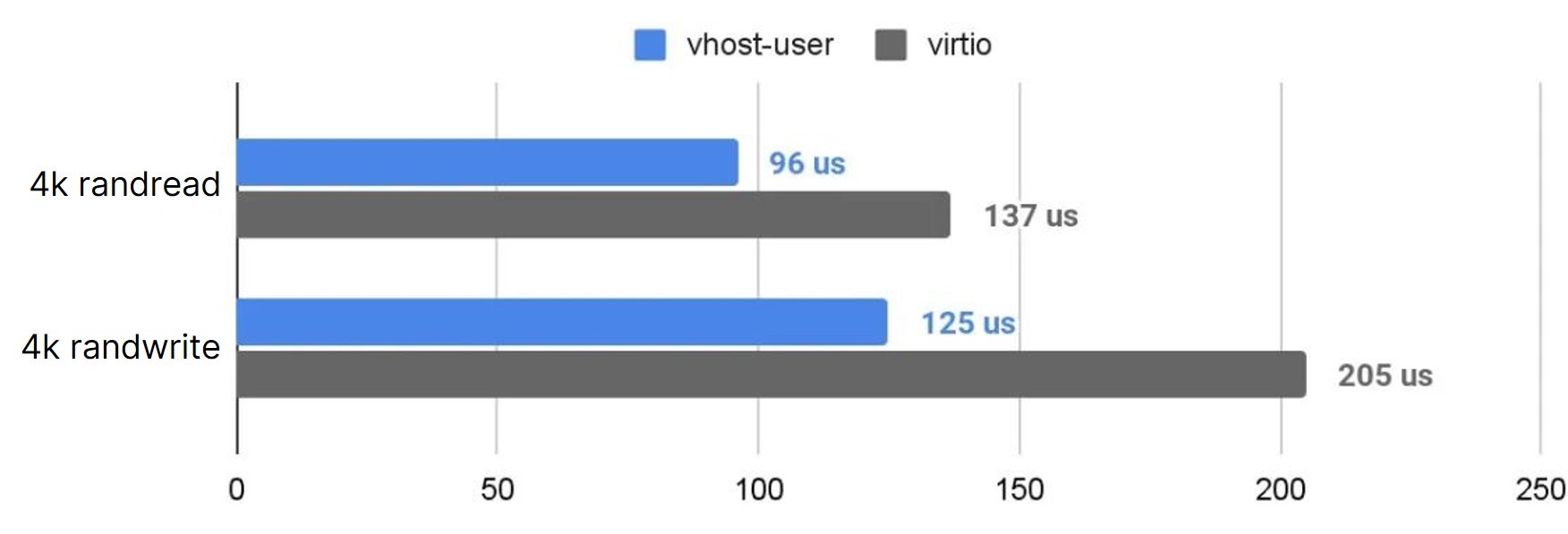

We also compared the single-node storage performance of Virtio and vhost-user, with the following results.

The test data comes from a three-node HCI cluster (RDMA was not enabled on the cluster’s storage network). The hardware configuration is as follows:

- Intel Xeon Gold 5122 3.6GHz

- 8*16G DDR4 2666MHz

- 2*Intel P4610 1.6T NVMe

- Mellanox CX-4 25Gbps

The following table compares the IOPS of the two schemes with 4K I/O and iodepth=128 (higher IOPS means better performance):

The following table compares the latency of the two schemes with 4K I/O and iodepth=1 (lower latency means better performance):

Conclusion

The comparison shows that vhost-user delivers better storage performance than the Virtio solution. For an enterprise-level product, however, performance is not the only requirement: the solution must also be reliable in a production environment. For example, it should support vhost reconnection/switching when the storage software fails, and hot scale-out of virtual machine memory.