Presently, ZBS uses RDMA (Remote Direct Memory Access) in two areas: the storage access network and the internal data synchronization network. We covered access protocols in a previous blog post, "Exploring the Architecture of ZBS – How NVMe-oF Boosts Storage Performance." This article focuses on the second scenario and shares more details on how ZBS implements RDMA (in fact, ZBS first gained RDMA support through its data synchronization design). We will also validate the performance gains RDMA brings through testing.

ZBS Storage Data Synchronization

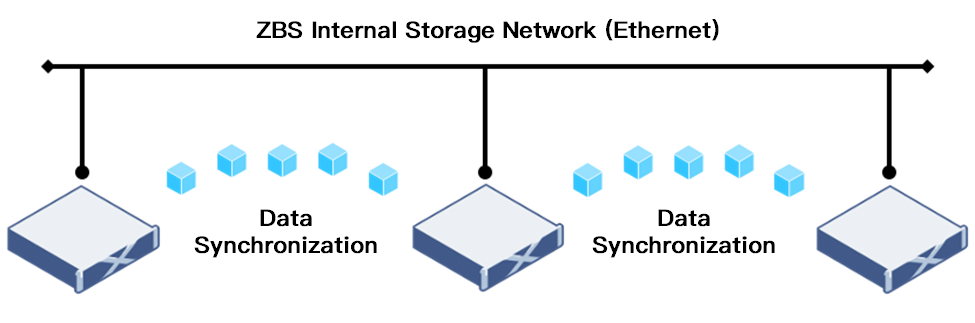

Distributed storage differs from centralized storage mainly in its architecture. To keep stored data consistent and reliable, a distributed architecture relies heavily on the network for data synchronization. For instance, in a 3-node (A/B/C) ZBS storage cluster with two-replica protection, each piece of data has 2 copies located on different physical nodes. Suppose a piece of data is stored on Node A and Node B. Whenever that data changes, ZBS must synchronize the change to both Node A and Node B before returning a confirmation. This synchronization takes place over the storage network.
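To make the write path concrete, here is a minimal Python sketch of a synchronous two-replica write: the change is sent to both replicas in parallel, and the write is confirmed only after every replica acknowledges it. The `Replica` class and `replicated_write` function are hypothetical illustrations, not ZBS code; in a real cluster each `apply` call is a round trip over the storage network, which is why synchronization latency shows up directly in write latency.

```python
from concurrent.futures import ThreadPoolExecutor


class Replica:
    """Hypothetical in-memory stand-in for one node's copy of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}

    def apply(self, key, value):
        # In a real cluster this call would cross the storage network.
        self.data[key] = value
        return True  # acknowledgment


def replicated_write(replicas, key, value):
    """Send the change to every replica in parallel; confirm only if all acknowledge."""
    with ThreadPoolExecutor(max_workers=len(replicas)) as pool:
        acks = list(pool.map(lambda r: r.apply(key, value), replicas))
    return all(acks)


node_a, node_b = Replica("A"), Replica("B")
confirmed = replicated_write([node_a, node_b], "block-42", b"new data")
print(confirmed)  # True
```

Because the confirmation waits for the slowest replica, any per-message network cost is paid on every write.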

Accordingly, the efficiency of data synchronization has a significant impact on the performance of distributed storage. Since this mechanism leaves considerable room for improvement, we'll look at it in more detail below.

For regular workloads, a ZBS storage network is typically configured with 10GbE switches and server network cards, using standard TCP/IP as the transport protocol. For workloads that demand high bandwidth and low latency, however, this configuration limits the performance of internal data synchronization. To meet these demands and fully exploit the I/O performance of high-speed storage media (e.g., NVMe disks), RDMA combined with a 25GbE or faster network may be the better choice.
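A quick back-of-the-envelope calculation shows why link speed matters. The sketch below converts a link's line rate into bytes per second and the maximum I/O rate it could carry for a given I/O size. It assumes every bit on the wire is payload (no Ethernet, IP, TCP, or RoCE header overhead), so real ceilings are lower; the helper names are illustrative only.

```python
KIB = 1024


def max_bytes_per_sec(gbit_per_sec):
    """Convert a link speed in Gb/s (decimal units) to bytes per second."""
    return gbit_per_sec * 1e9 / 8


def max_iops(gbit_per_sec, io_size_bytes):
    """Upper bound on I/Os per second if the link carried nothing but payload."""
    return max_bytes_per_sec(gbit_per_sec) / io_size_bytes


for speed in (10, 25):
    print(f"{speed}GbE: {max_bytes_per_sec(speed) / 1e6:.0f} MB/s, "
          f"4K <= {max_iops(speed, 4 * KIB):,.0f} IOPS, "
          f"256K <= {max_iops(speed, 256 * KIB):,.0f} IOPS")
# 10GbE: 1250 MB/s, 4K <= 305,176 IOPS, 256K <= 4,768 IOPS
# 25GbE: 3125 MB/s, 4K <= 762,939 IOPS, 256K <= 11,921 IOPS
```

For large sequential I/O, a 10GbE link becomes the ceiling well before a set of NVMe disks does, which is one reason faster links are paired with RDMA for high-bandwidth workloads.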

TCP/IP

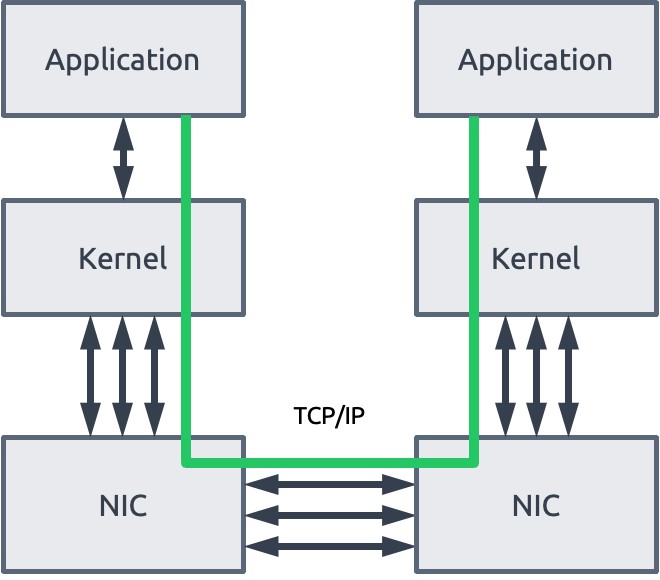

The main difference between distributed storage and traditional storage is that distributed storage is software-defined and runs on standard commodity hardware. ZBS has long used the TCP/IP stack for internal storage communication because it is highly compatible with existing Ethernet and meets most customers' workload requirements. However, TCP/IP communication is increasingly unable to support high-performance workloads, for two reasons:

- Latency caused by TCP/IP protocol stack processing

When the TCP stack sends or receives messages, the system kernel has to switch contexts multiple times. Data transmission also involves multiple memory copies and relies on the CPU for protocol encapsulation, adding latency on the order of tens of microseconds.

- Higher CPU consumption caused by TCP protocol stack processing

Beyond latency, TCP/IP networking requires the server CPU to perform multiple memory copies in the protocol stack. As the distributed storage network grows and bandwidth requirements rise, this processing load on the CPU increases accordingly. The result is higher CPU consumption, which is particularly detrimental in a hyperconverged architecture, where storage and business workloads share the same servers' CPUs.
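The copy overhead described above can be illustrated with a simplified model. The Python below lists the data-movement steps for one message on a conventional socket path and on an RDMA path, marks which steps the CPU performs, and multiplies by throughput to estimate how many bytes per second the CPUs must copy. It is a toy under stated assumptions: no zero-copy socket optimizations, DMA steps handled entirely by the NIC, and other protocol processing (headers, checksums, interrupts) ignored.

```python
# Each step: (side, data movement, engine that performs it).
# Assumption: no zero-copy socket tricks; "dma" steps are done by the NIC, not the CPU.
SOCKET_PATH = [
    ("sender", "user buffer -> kernel socket buffer", "cpu"),
    ("sender", "kernel socket buffer -> NIC", "dma"),
    ("receiver", "NIC -> kernel socket buffer", "dma"),
    ("receiver", "kernel socket buffer -> user buffer", "cpu"),
]
RDMA_PATH = [
    ("sender", "registered user buffer -> NIC", "dma"),
    ("receiver", "NIC -> registered user buffer", "dma"),
]


def cpu_copies(path):
    """Number of copies per message that the CPU performs (summed over both ends)."""
    return sum(1 for _, _, engine in path if engine == "cpu")


def cpu_copy_bytes_per_sec(path, throughput_bytes_per_sec):
    """Bytes per second the CPUs must copy, across both ends, to sustain the throughput."""
    return cpu_copies(path) * throughput_bytes_per_sec


line_rate = 25e9 / 8  # 25GbE at full line rate, in bytes per second
print(cpu_copies(SOCKET_PATH), cpu_copies(RDMA_PATH))  # 2 0
print(cpu_copy_bytes_per_sec(SOCKET_PATH, line_rate) / 1e9)  # 6.25 (GB/s)
```

In this model the copy work grows linearly with bandwidth on the socket path and stays at zero on the RDMA path.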

TCP/IP Socket Communication

RDMA

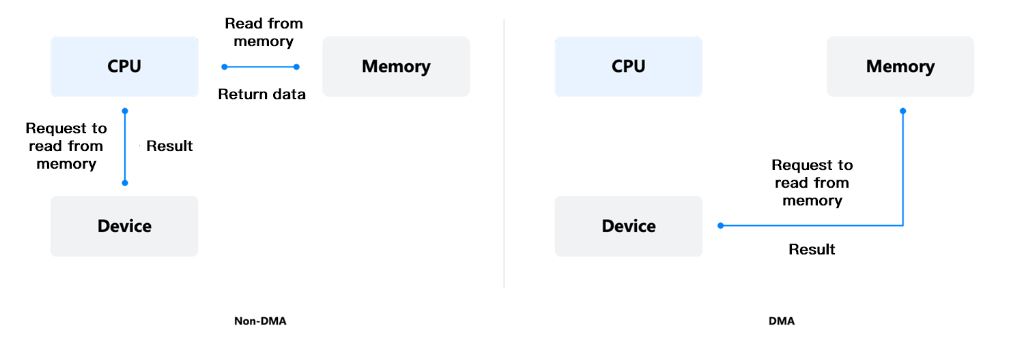

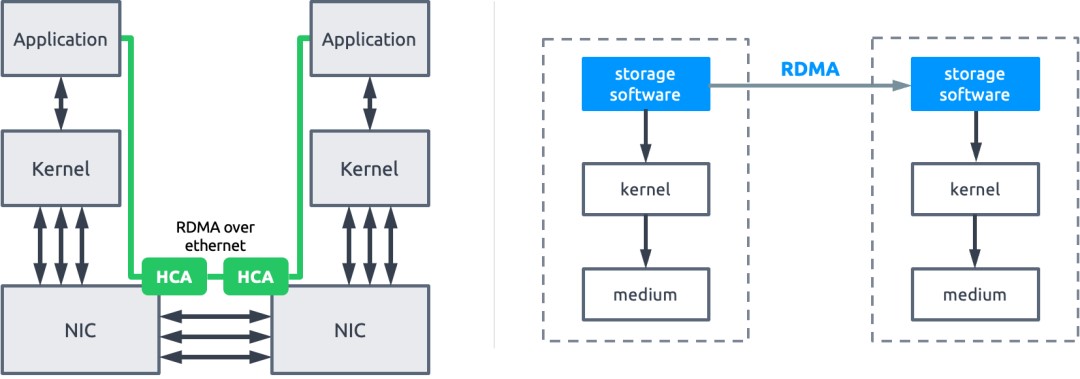

RDMA stands for Remote Direct Memory Access, where DMA refers to a device reading from or writing to memory directly, without CPU involvement. RDMA reduces TCP/IP transmission latency and CPU consumption by moving data directly into the memory of a remote system, bypassing the kernel network protocol stack and the CPU.
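The core idea, memory that a remote NIC may write into without the target application's involvement, can be modeled in a few lines. In the real Verbs API, an application registers memory with `ibv_reg_mr`, which returns a local key and a remote key (`lkey`/`rkey`), and a peer posts an `IBV_WR_RDMA_WRITE` work request naming the remote address and `rkey`. The Python below is a hypothetical toy that only mimics the key and bounds check; `RemoteHost`, `register`, and `nic_write` are illustrative names, and no RDMA library is used.

```python
import secrets


class RemoteHost:
    """Hypothetical model of a host whose NIC serves one-sided RDMA writes."""

    def __init__(self, size):
        self.memory = bytearray(size)
        self.regions = {}  # rkey -> (start offset, length)

    def register(self, start, length):
        # Loosely mirrors ibv_reg_mr: expose a memory range and hand out a key.
        rkey = secrets.randbits(32)
        self.regions[rkey] = (start, length)
        return rkey

    def nic_write(self, rkey, addr, payload):
        # The "NIC" validates the key and bounds, then writes straight into memory.
        # No application code on this host runs during the transfer.
        if rkey not in self.regions:
            raise PermissionError("unknown rkey")
        start, length = self.regions[rkey]
        if addr < start or addr + len(payload) > start + length:
            raise PermissionError("access outside registered region")
        self.memory[addr:addr + len(payload)] = payload


remote = RemoteHost(4096)
rkey = remote.register(1024, 512)
remote.nic_write(rkey, 1024, b"replica update")
print(bytes(remote.memory[1024:1038]))  # b'replica update'
```

The registration step is what makes direct placement safe: the NIC only accepts writes that present a valid key and stay inside the registered range.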

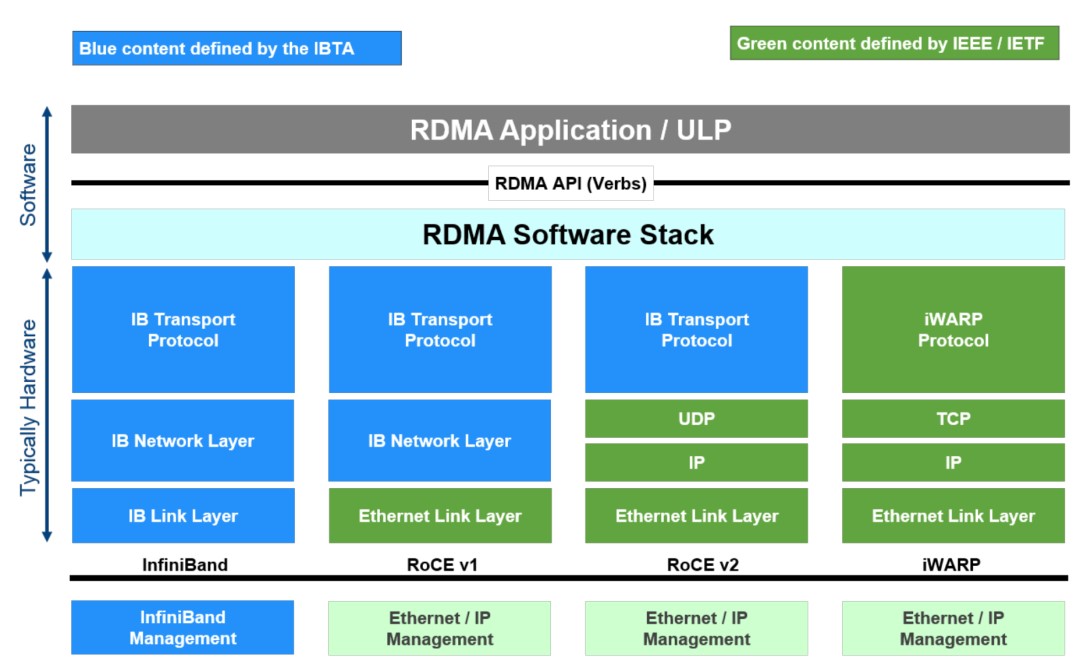

Currently, there are three ways to implement RDMA:

Implementations of RDMA (Source: SNIA)

InfiniBand (IB) is a full-stack architecture that provides a complete RDMA solution, including a programming interface, Layer 2 to Layer 4 protocols, network adapters, and switches. The InfiniBand programming interface is the de facto standard for RDMA and is also used to program RoCE and iWARP.

RoCE (RDMA over Converged Ethernet) and iWARP carry RDMA over Ethernet while exposing the same InfiniBand programming interface. (iWARP is often expanded as "Internet Wide Area RDMA Protocol," but according to the RDMA Consortium, iWARP is not an acronym.) RoCE comes in two versions. RoCEv1 places the InfiniBand network and transport layer headers directly on Ethernet. It cannot be routed across IP networks, which makes it more of a transitional protocol with limited use. RoCEv2 runs over UDP/IP and is therefore routable. iWARP is built on TCP.
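The routing difference between the two RoCE versions comes down to header layering. The sketch below lists simplified per-packet header stacks: RoCEv1 rides directly on Ethernet (EtherType 0x8915) with an InfiniBand Global Route Header (GRH), while RoCEv2 is carried in IPv4/UDP with destination port 4791. It assumes untagged Ethernet (no 802.1Q VLAN tag, which lossless deployments often add), ignores preamble and inter-frame gap, and omits optional extension headers; the helper names are illustrative.

```python
# Simplified header stacks, outermost first; sizes in bytes.
ROCE_V1 = [("Ethernet (EtherType 0x8915)", 14), ("IB GRH", 40), ("IB BTH", 12)]
ROCE_V2 = [("Ethernet", 14), ("IPv4", 20), ("UDP (dport 4791)", 8), ("IB BTH", 12)]
TRAILER = [("ICRC", 4), ("Ethernet FCS", 4)]


def ip_routable(stack):
    """An IP router can forward the packet only if it carries an IP header.

    RoCEv1's GRH is an InfiniBand layer-3 header, which IP routers do not understand.
    """
    return any(name.startswith("IPv") for name, _ in stack)


def header_overhead(stack):
    """Total header and trailer bytes added to each packet's payload."""
    return sum(size for _, size in stack + TRAILER)


for name, stack in (("RoCEv1", ROCE_V1), ("RoCEv2", ROCE_V2)):
    print(name, "routable:", ip_routable(stack), "overhead:", header_overhead(stack), "bytes")
```

Under these assumptions it prints an overhead of 74 bytes for RoCEv1 (not IP-routable) and 62 bytes for RoCEv2 (IP-routable): wrapping the InfiniBand transport header in UDP/IP is what lets RoCEv2 cross routed networks.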

Both InfiniBand and RoCE are based on the InfiniBand Architecture Specification, also known as the "IB Specification." iWARP, on the other hand, follows IETF protocol standards. Still, iWARP incorporates many InfiniBand design ideas and uses the same programming interface (Verbs*). As a result, the three protocols are conceptually very similar.

* A verb is an abstraction of a network adapter function in RDMA. Each verb is a function that performs one RDMA action, such as sending or receiving data, or creating or destroying RDMA objects.
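To show what those verbs look like in practice, the list below names real libibverbs functions in the order a typical reliable-connection program calls them, tagged with the two kinds of actions the footnote mentions: managing RDMA objects (control path) and moving data (data path). It is only a data structure for illustration; it does not call the library, and out-of-band steps such as exchanging QP numbers and `rkey`s between peers are omitted.

```python
# Typical libibverbs call order, tagged as object management ("control")
# or data transfer ("data").
VERBS_LIFECYCLE = [
    ("ibv_get_device_list", "control"),  # discover RDMA devices
    ("ibv_open_device", "control"),      # open one RDMA NIC
    ("ibv_alloc_pd", "control"),         # protection domain
    ("ibv_reg_mr", "control"),           # register memory the NIC may access
    ("ibv_create_cq", "control"),        # completion queue
    ("ibv_create_qp", "control"),        # queue pair (send + receive queues)
    ("ibv_modify_qp", "control"),        # move the QP through INIT -> RTR -> RTS
    ("ibv_post_recv", "data"),           # hand receive buffers to the NIC
    ("ibv_post_send", "data"),           # send, RDMA write, or RDMA read
    ("ibv_poll_cq", "data"),             # reap completions
    ("ibv_destroy_qp", "control"),
    ("ibv_destroy_cq", "control"),
    ("ibv_dereg_mr", "control"),
    ("ibv_dealloc_pd", "control"),
    ("ibv_close_device", "control"),
]


def verbs_by_kind(kind):
    """Return the verb names of one kind, preserving call order."""
    return [name for name, k in VERBS_LIFECYCLE if k == kind]


print(verbs_by_kind("data"))  # ['ibv_post_recv', 'ibv_post_send', 'ibv_poll_cq']
```

Control-path objects are typically created once at setup and reused, so per-I/O work is limited to the few data-path verbs, which are usually issued from user space without a kernel transition.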

RDMA requires ecosystem support from network card and switch vendors. Protocol support among major network vendors is summarized below:

Although InfiniBand offers the best protocol performance and the tightest hardware/software integration, it is also the most expensive and requires replacing all network equipment, including network cards, cables, and switches. This does not fit distributed storage built on standard commodity hardware, so ZBS ruled out InfiniBand first.

Between RoCE and iWARP, RoCE runs over UDP, which provides no retransmission or congestion control of its own, so RoCE needs a lossless network. Even so, SmartX's assessment of the ecosystem, protocol efficiency, complexity, and especially performance found that RoCE shows more potential than iWARP. ZBS currently uses RoCEv2 to implement RDMA for its internal data synchronization network.
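One way to see why RoCE depends on a lossless network: since UDP provides no recovery, retransmission falls to the RDMA NIC, and many RoCE NICs have historically used go-back-N, where a single drop forces the sender to resend the lost packet and everything sent after it. The toy functions below (hypothetical names) compare that with selective repeat for one dropped packet; they model no specific NIC and do not describe ZBS internals.

```python
# Assumption: `in_flight` packets (indexed 0..in_flight-1) were sent back to back
# and exactly one of them, at `lost_index`, was dropped.


def _check(in_flight, lost_index):
    if not 0 <= lost_index < in_flight:
        raise ValueError("lost_index must be within the in-flight window")


def resent_go_back_n(in_flight, lost_index):
    """Go-back-N: resend the lost packet and every packet sent after it."""
    _check(in_flight, lost_index)
    return in_flight - lost_index


def resent_selective_repeat(in_flight, lost_index):
    """Selective repeat: resend only the lost packet."""
    _check(in_flight, lost_index)
    return 1


for lost in (0, 10, 99):
    print(lost, resent_go_back_n(100, lost), resent_selective_repeat(100, lost))
```

With 100 packets in flight, losing the 11th packet (index 10) costs 90 retransmissions under go-back-N versus 1 under selective repeat. This is why RoCE deployments typically rely on mechanisms such as Priority Flow Control (PFC) to avoid drops in the first place.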

ZBS RDMA RoCEv2

Performance Validation

We evaluated RDMA by comparing ZBS performance with and without RDMA enabled. The test environment, including hardware configuration, system version, and related software versions, was kept strictly consistent.

Test Environment

The storage cluster consisted of 3 nodes running SMTX OS 5.0, with RDMA enabled on the internal storage network. The cluster used tiered storage, and all storage nodes had the same hardware configuration.

Result

We tested RDMA and TCP/IP under the same environment and test method. ZBS enables data locality by default, which serves most reads from the local node and would therefore hide the cost of cross-node I/O. To observe cross-node read/write performance more clearly, the test was run on the Data Channel plane (the RPC channel ZBS uses to send and receive requests between nodes). The test was intended only to compare network performance, so the data from the read/write I/O was not persisted.

Performance Comparison

Conclusion

The results show that, with the same software/hardware environment and test method, using RDMA as the internal data synchronization protocol can deliver better I/O performance: higher 4K random IOPS, lower latency, and, in the 256K sequential read/write scenario, higher throughput that fully utilizes the 25GbE network bandwidth.

To Wrap Up

Because RDMA optimizes data communication across the network, it is widely used in enterprise scenarios, particularly distributed storage. With RDMA, internal data synchronization becomes more efficient, improving storage performance for business applications with demanding workloads.

Reference

- RDMA over Converged Ethernet. Wikipedia. https://en.wikipedia.org/wiki/RDMA_over_Converged_Ethernet

- How Ethernet RDMA Protocols iWARP and RoCE Support NVMe over Fabrics. SNIA. https://www.snia.org/sites/default/files/ESF/How_Ethernet_RDMA_Protocols_Support_NVMe_over_Fabrics_Final.pdf