HCI products differ in their read/write mechanisms and I/O paths, which affects their efficiency and overhead. Operators should therefore understand how I/O works in HCI when planning a deployment (e.g., when sizing network bandwidth for the I/O paths).

This article compares the I/O paths of VMware vSAN and SmartX HCI by analyzing read and write I/O under various scenarios and their locality probabilities to explain the effects of the I/O path on storage and cluster performance.

Key Takeaways

- Local reads and writes, with the same hardware and software configurations, should have a shorter response time than remote I/O because network transmission inevitably increases I/O latency and overhead.

- Under the normal state and in the data recovery scenario, ZBS has a higher probability of local I/O and a theoretically lower latency than vSAN. Although vSAN frequently performs remote I/O under the normal state, it provides better I/O paths for VM migration.

- Excessive remote I/O can also cause issues such as network resource contention cluster-wide.

I/O Path and Performance Impact

The application layer transfers data to a storage medium (usually a disk) for persistent storage through the system’s I/O path. This process generally involves a variety of hardware devices and software logic, and the overhead and processing time (i.e., latency) increase as more hardware and software components are involved. In theory, therefore, the design of the I/O path has a significant impact on overall application performance.

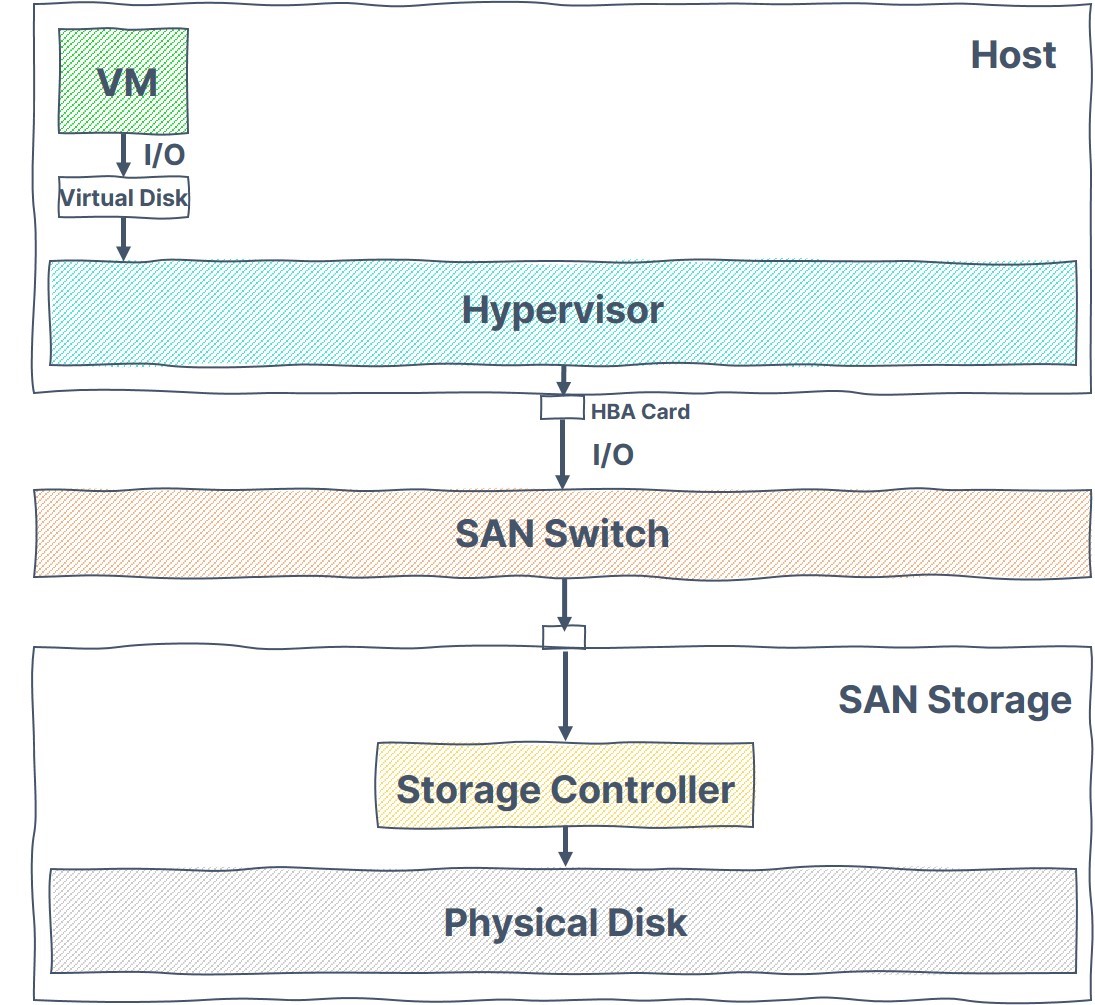

I/O Path in Legacy Virtualization

In a traditional three-tier architecture, writes are routed from a VM through the hypervisor, the host’s FC HBA card, the FC SAN switch, and the SAN storage controller before being stored on a physical disk.

The hardware devices and software logic involved in this process include:

- Hardware Devices: host/SAN switches/SAN storage device…

- Software Logic: Guest OS/virtual disk/virtualization software…

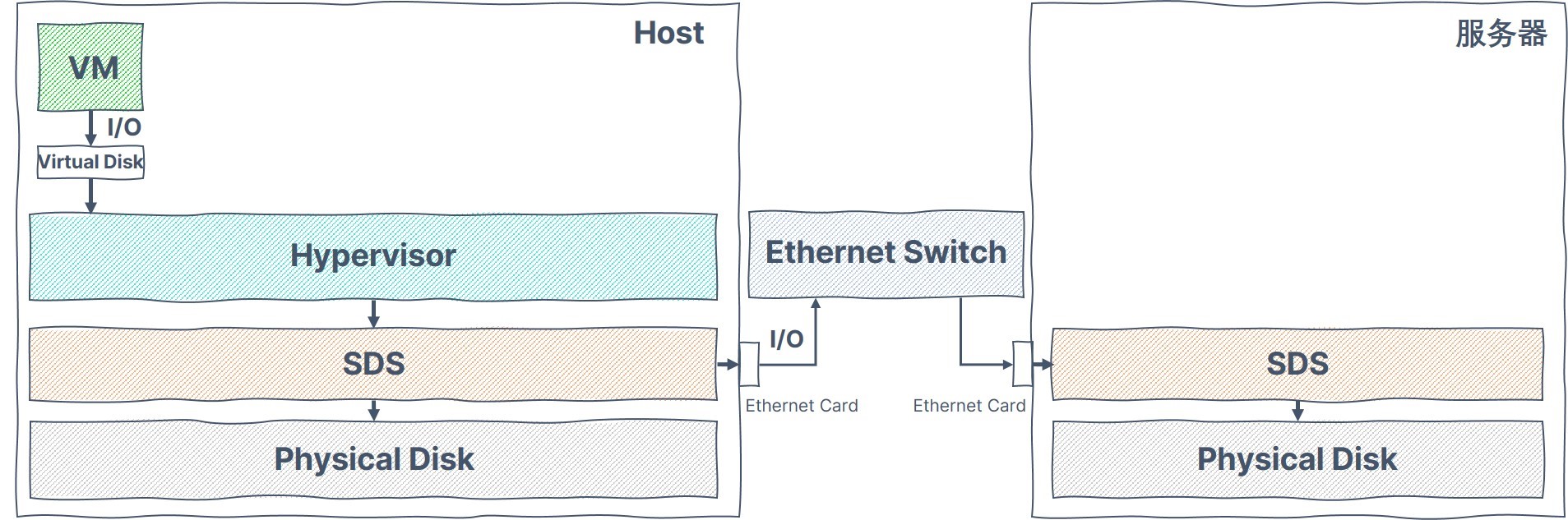

I/O Path in HCI

As HCI converges compute, network, and storage, writes are routed from a VM through the hypervisor and the software-defined storage (SDS) layer before being stored on physical disks, either locally or on another host over Ethernet.

The hardware devices and software logic involved in this process include:

- Hardware Devices: host/Ethernet switch…

- Software Logic: Guest OS/virtual disk/virtualization software/SDS…

In legacy virtualization (with SAN storage), VM data must pass through the SAN network before reaching the SAN storage. In other words, 100% of reads and writes traverse the network. In contrast, VM I/O in HCI is written to disks by the built-in SDS, which is based on a distributed architecture. As a result, I/O in HCI occurs both remotely (over the network) and locally (on the local disk).

Local reads and writes, with the same hardware and software configurations, should have a shorter response time than remote I/O because network transmission inevitably increases I/O latency and overhead, even when low-latency switches are deployed.

I/O Path Comparison: VMware vs SmartX

I/O Path in vSAN

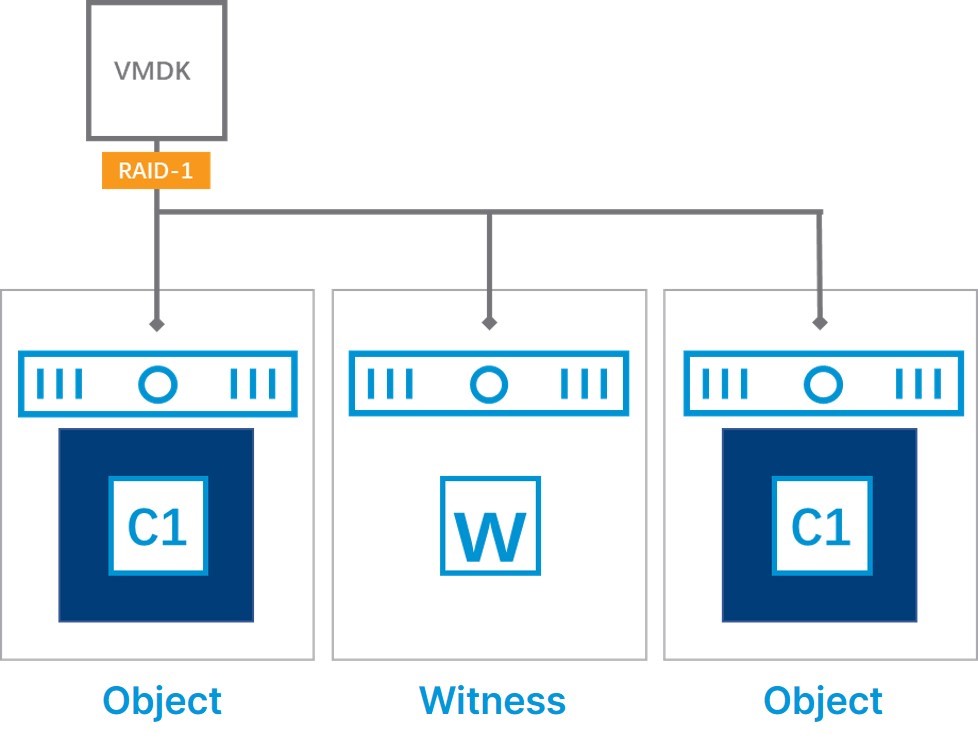

vSAN is VMware’s HCI storage software. It stores VM disk files (.vmdk files) as objects and provides a variety of data redundancy mechanisms, including FTT=1 (RAID1 Mirror/RAID5) and FTT=2 (RAID1 Mirror/RAID6). The following discussion is based on the commonly used FTT=1 (RAID1 Mirror), because RAID5/6 is only applicable to all-flash clusters, whereas hybrid configurations are more common in HCI deployments.

With FTT=1, two replicas of the VM disk (.vmdk) are created and placed on two different hosts. In vSAN, the default size of an object is 255GB with 1 stripe. So, if a VM creates a 200GB virtual disk, vSAN generates a pair of mirrored components, one per replica, distributed across two hosts. There is also a witness component, but we leave it aside here because it does not contain business data. If the virtual disk is larger than 255GB, it is split into multiple components of up to 255GB each.
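The splitting rule described above can be sketched as a quick calculation. This is a simplified model that assumes a fixed 255GB limit per component and a single stripe, and it ignores the witness component; `components_per_replica` is a hypothetical helper for illustration, not a vSAN API.

```python
import math

COMPONENT_LIMIT_GB = 255  # default size cited in this article


def components_per_replica(vdisk_gb: float, limit_gb: int = COMPONENT_LIMIT_GB) -> int:
    """Number of components needed to hold one replica of a virtual disk."""
    return max(1, math.ceil(vdisk_gb / limit_gb))


for size_gb in (200, 255, 600):
    per_replica = components_per_replica(size_gb)
    # FTT=1 (RAID1 Mirror) keeps two replicas, so the data components double.
    print(f"{size_gb}GB disk: {per_replica} per replica, {2 * per_replica} data components")
```

Under these assumptions, a 200GB disk needs one component per replica, while a 600GB disk needs three.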

Write I/O Path

I/O Path under Normal State

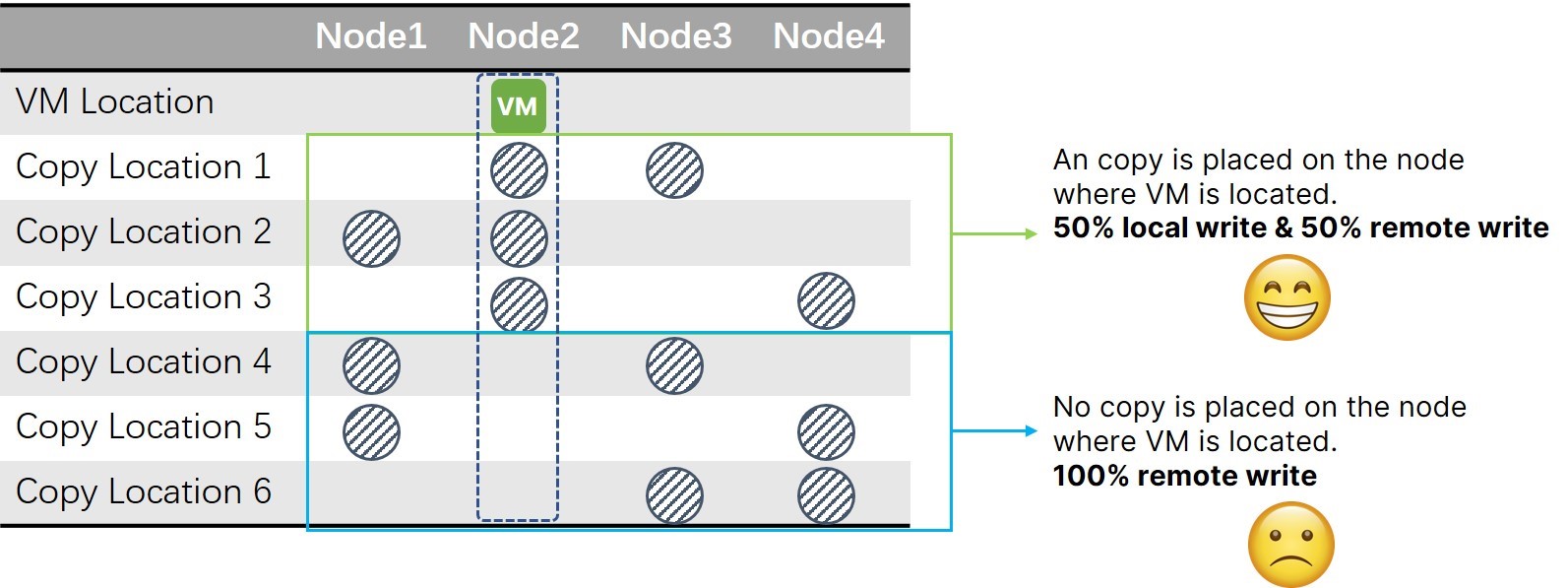

Instead of using a local-preference strategy, vSAN distributes components across local and remote nodes randomly. Let’s look at vSAN’s write I/O using a 4-node cluster as an example.

In a 4-node cluster, there are 6 possible ways to place an object and its replica. In three of them (a 50% chance), both copies sit on remote nodes, so both must be written over the network. The other three are hybrid placements, with one copy placed locally and the other remotely. In other words, even in the best case, at least one copy is inevitably written over the network in vSAN.
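The six placements can be enumerated directly. The sketch below assumes the VM runs on a node labeled `A` and that every pair of hosts is equally likely to hold the two copies; the node labels and the `classify_placements` helper are illustrative only.

```python
from itertools import combinations


def classify_placements(nodes, vm_node):
    """Split all two-host placements into hybrid (one local copy) and fully remote."""
    hybrid, remote = [], []
    for pair in combinations(nodes, 2):
        # A placement is hybrid when the VM's own node holds one of the copies.
        (hybrid if vm_node in pair else remote).append(pair)
    return hybrid, remote


nodes = ["A", "B", "C", "D"]
hybrid, remote = classify_placements(nodes, vm_node="A")

print("total placements:", len(hybrid) + len(remote))
print("hybrid (1 local + 1 remote):", hybrid)
print("fully remote (2 remote):", remote)
```

For four nodes this yields three hybrid and three fully remote placements, matching the 50% figure.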

Read I/O Path

I/O Path under Normal State

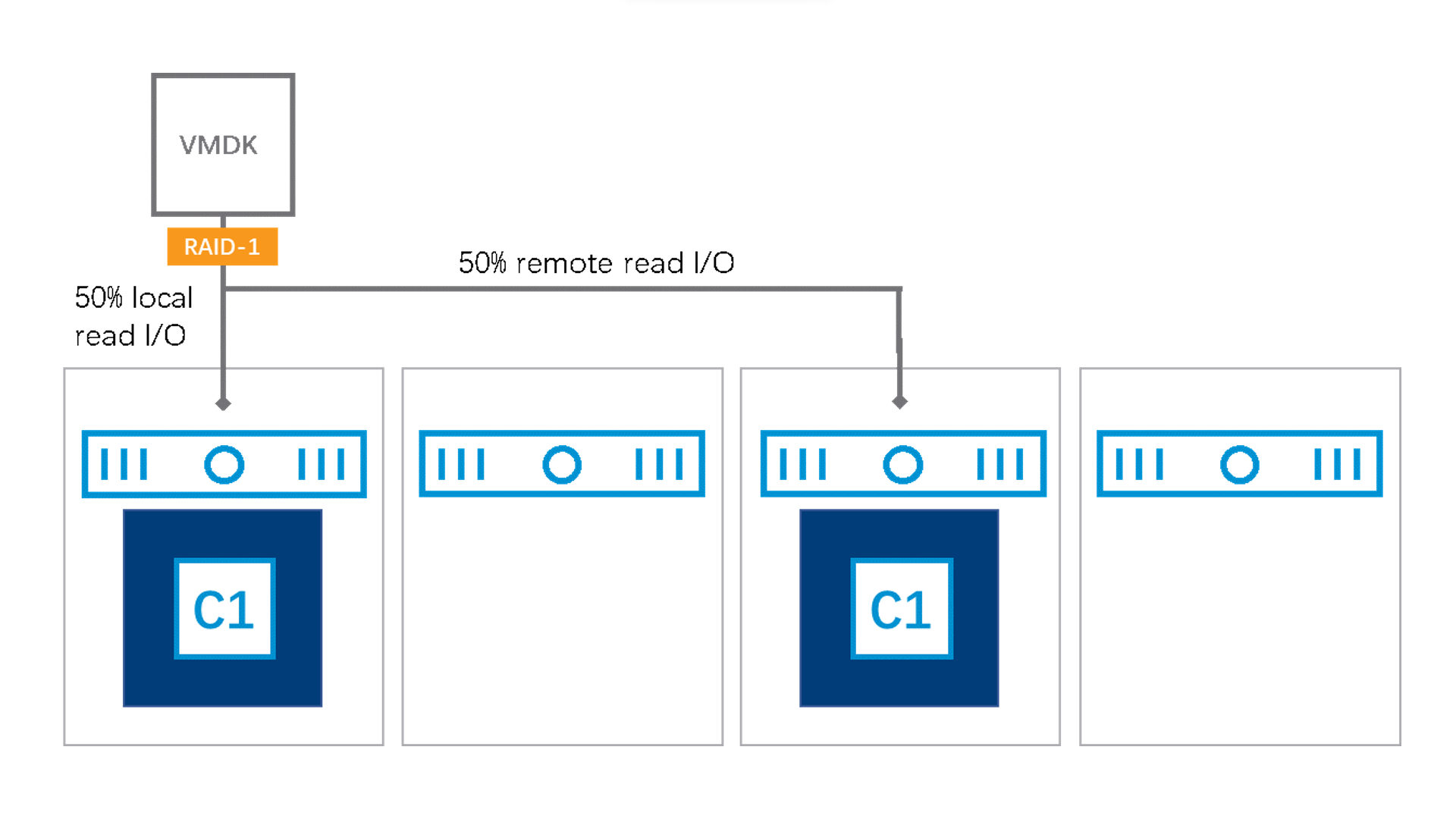

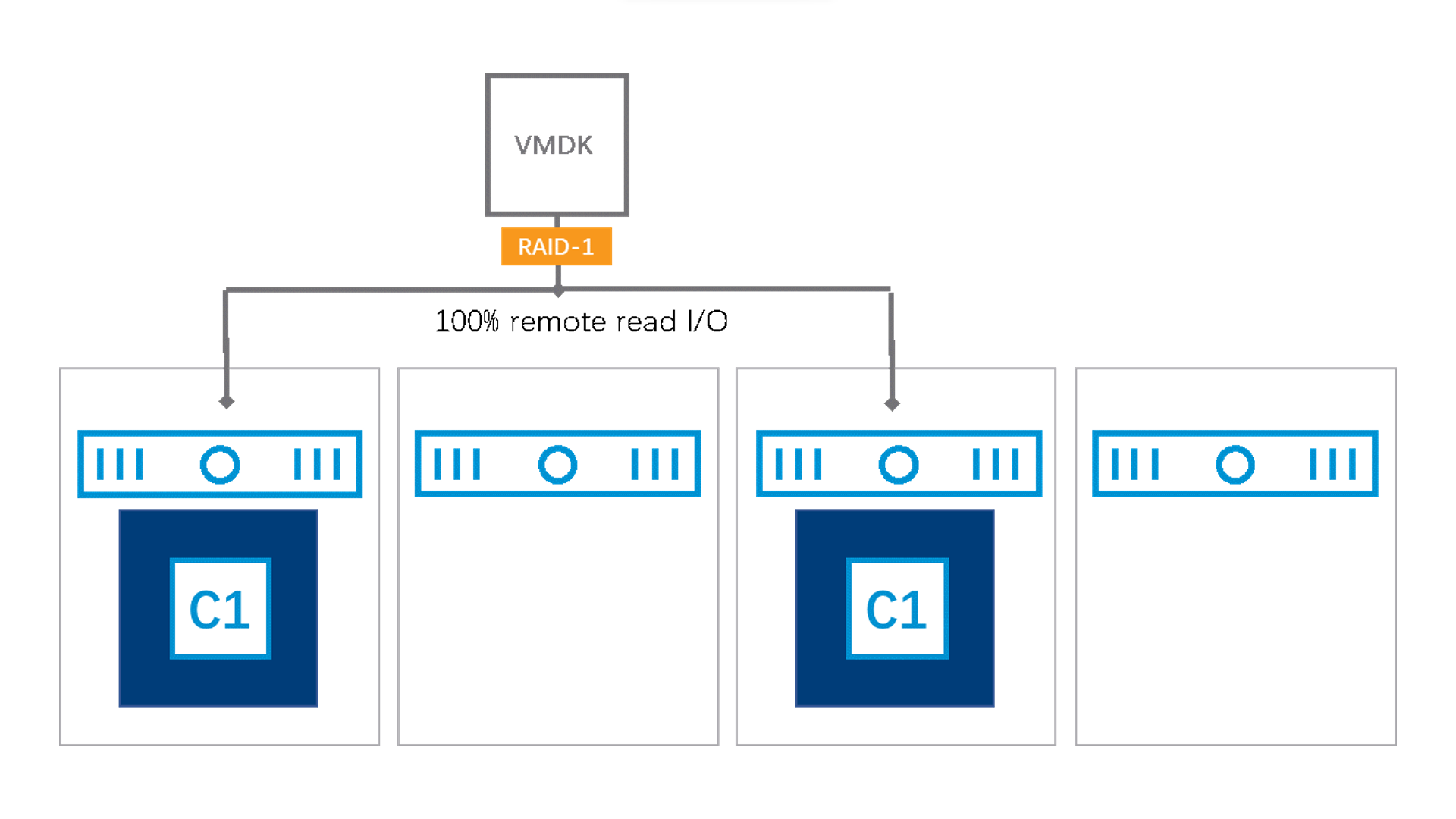

According to technical data disclosed at VMworld [1], vSAN’s read I/O follows 3 principles:

- Reads are load-balanced across replicas.

- Reads are not necessarily local (e.g., when only one copy is left).

- A given data block is always read from the same replica.

Because vSAN load-balances reads across replicas, even if the host where the VM resides holds a replica (a 50% probability, according to Figure 4), 50% of reads still go over the network. Furthermore, there is a 50% probability that the VM’s host holds no replica at all, in which case all data must be read from remote replicas. In short, under normal conditions, vSAN does not necessarily read from a local replica.
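Combining the two 50% figures gives the average share of reads served locally. The following is a simplified model, assuming uniform random placement of the two replicas and an even split of reads between them; the function name and return keys are hypothetical.

```python
from fractions import Fraction


def vsan_read_locality(num_nodes: int) -> dict:
    """Expected local/remote read share for a 2-replica object under the stated assumptions."""
    # Probability that one of the two replicas lands on the VM's own node.
    p_local_replica = Fraction(2, num_nodes)
    # Even with a local replica, load balancing sends half of the reads to the remote copy.
    expected_local = p_local_replica * Fraction(1, 2)
    return {
        "p_local_replica": p_local_replica,
        "expected_local_reads": expected_local,
        "expected_remote_reads": 1 - expected_local,
    }


for name, value in vsan_read_locality(4).items():
    print(f"{name}: {value}")
```

For a 4-node cluster the model gives a 1/2 chance of having a local replica and, averaged over all placements, 1/4 of reads served locally and 3/4 over the network.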

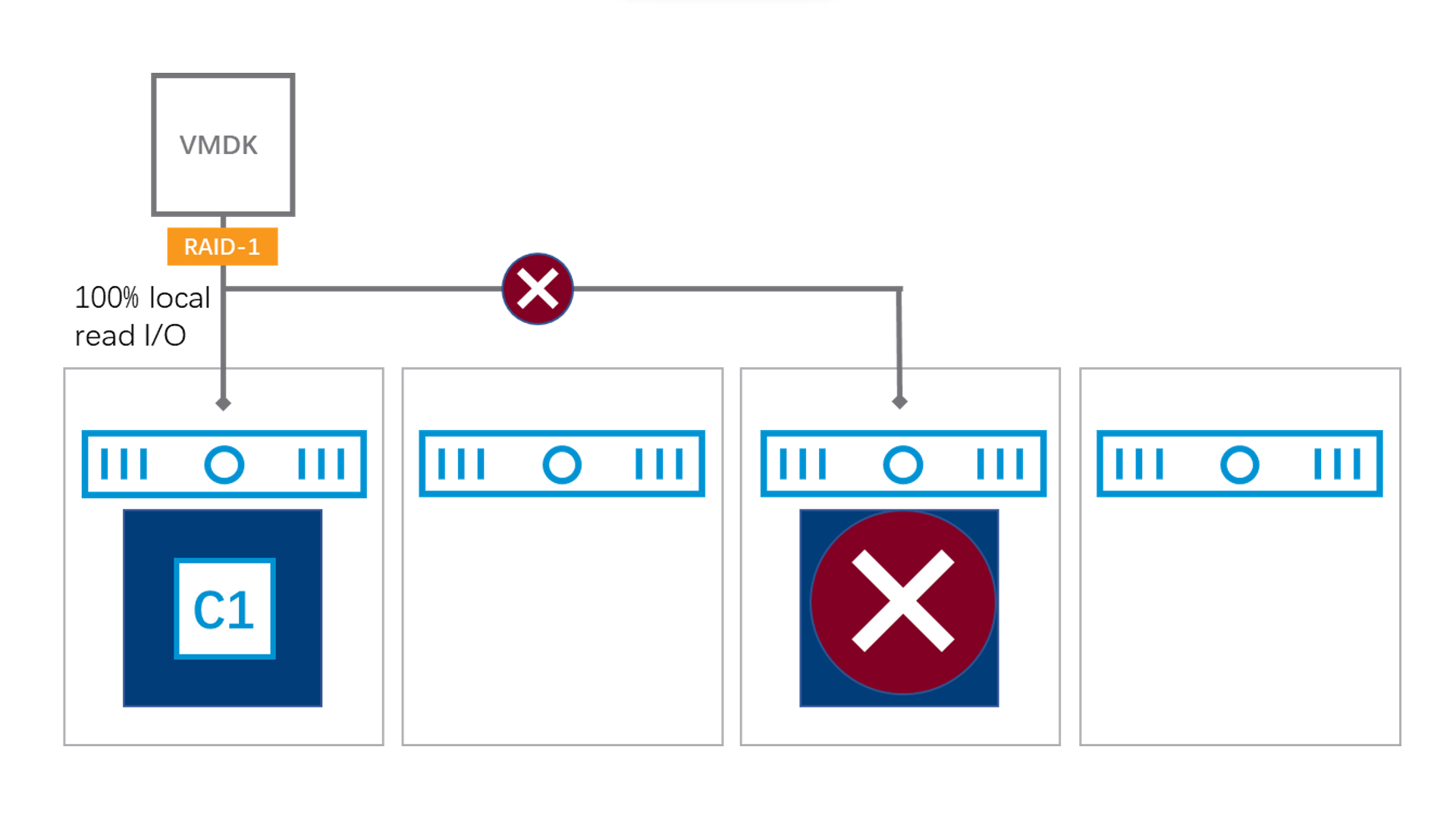

I/O Path in Downtime

When a replica fails in a cluster, all read I/O goes to the remaining replica; with the other one lost, balanced reading is no longer possible.

In this scenario, there is a 25% probability of reading all data locally, which is a shorter I/O path, and a 75% probability of reading all data remotely.
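The 25%/75% split can be checked by enumerating every placement together with every choice of surviving replica. This sketch assumes a 4-node cluster, uniform random placement, and that either replica is equally likely to be the one that fails; the names are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def p_surviving_replica_local(nodes, vm_node):
    """Probability that the only remaining replica sits on the VM's node."""
    # For each placement, either member may survive (the other one is the lost replica).
    survivors = [node for pair in combinations(nodes, 2) for node in pair]
    local = sum(1 for node in survivors if node == vm_node)
    return Fraction(local, len(survivors))


p_local = p_surviving_replica_local(["A", "B", "C", "D"], vm_node="A")
print("all reads local: ", p_local)
print("all reads remote:", 1 - p_local)
```

Of the 12 equally likely outcomes, 3 leave the surviving replica on the VM's node, which gives 1/4 local and 3/4 remote.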

In addition, in the event of a hard disk failure, data is repaired by reading the only available replica. With vSAN’s default component size (255GB) and number of stripes (1), a component is concentrated on one or two hard disks, which makes it difficult to perform concurrent recovery across disks. This is why VMware suggests configuring more stripes in the storage policy to avoid the performance bottleneck of a single hard disk.

I/O Path in ZBS

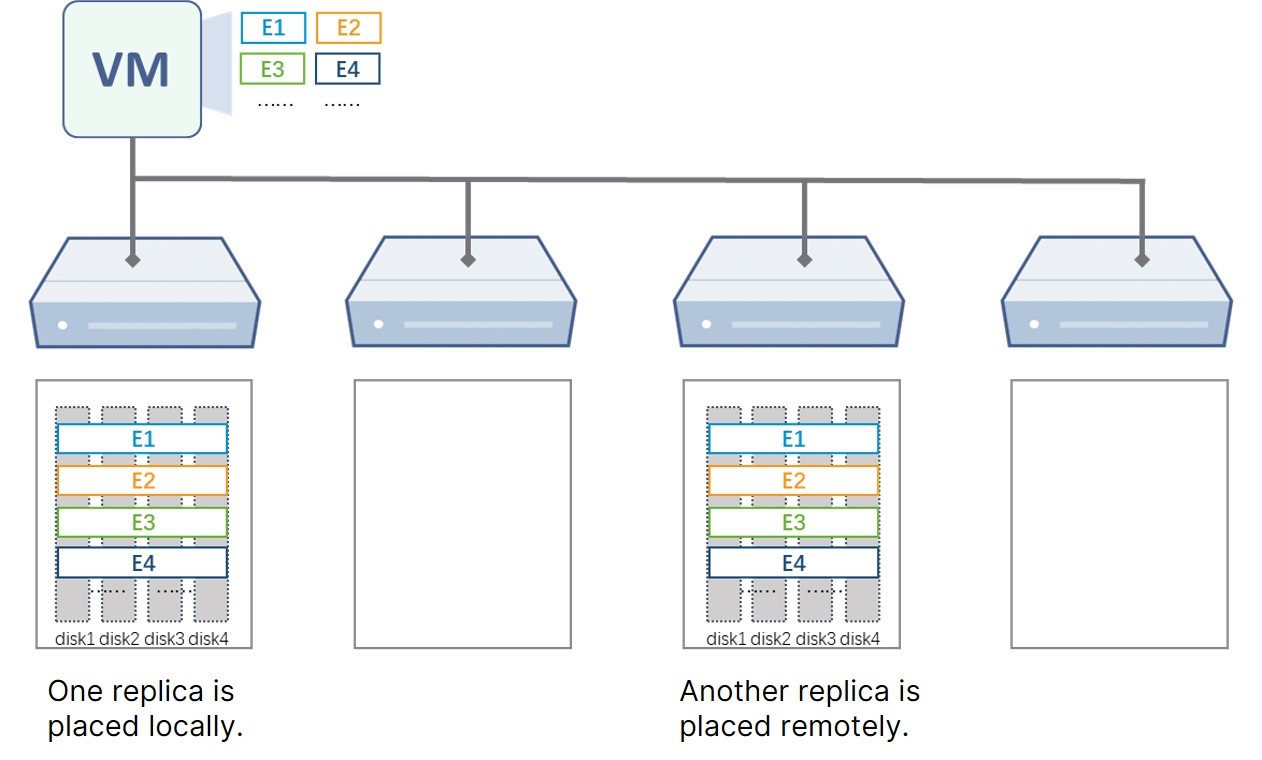

ZBS, SmartX’s distributed block storage, slices the virtual disk into multiple data blocks (extents) and provides data redundancy through 2-replica and 3-replica strategies. The 2-replica strategy offers the same level of redundancy as vSAN FTT=1 (RAID1 Mirror), so we analyze the I/O path in ZBS based on the 2-replica strategy.

With the 2-replica strategy, a ZBS virtual disk consists of multiple 256MB extents, each stored as a mirrored pair. The default number of stripes is 4. Notably, ZBS features data locality, which gives precise control over replica placement: one full replica is placed on the host where the VM is located, and the other is placed on a remote host.

Write I/O Path

I/O Path under Normal State

Again, we take a 4-node cluster as an example. Since ZBS guarantees that one replica is always on the VM’s node, 50% of writes occur locally and 50% remotely (one copy each), regardless of where the other replica is placed. As a result, a 100% remote write never occurs in ZBS in this scenario.
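The same kind of enumeration shows why the result does not depend on where the second replica goes. The sketch assumes the VM runs on node `A` of a 4-node cluster and that the local replica is always pinned to that node; both helpers are hypothetical.

```python
def zbs_placements(nodes, vm_node):
    """All 2-replica placements when one replica is pinned to the VM's node."""
    return [(vm_node, other) for other in nodes if other != vm_node]


def local_write_share(placement, vm_node):
    """Fraction of the written copies that stay on the VM's node."""
    return sum(1 for node in placement if node == vm_node) / len(placement)


placements = zbs_placements(["A", "B", "C", "D"], vm_node="A")
shares = [local_write_share(p, "A") for p in placements]

for placement, share in zip(placements, shares):
    print(placement, f"local share = {share:.0%}")
```

Every one of the three possible placements has exactly one local and one remote copy, so the local share is always 50%.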

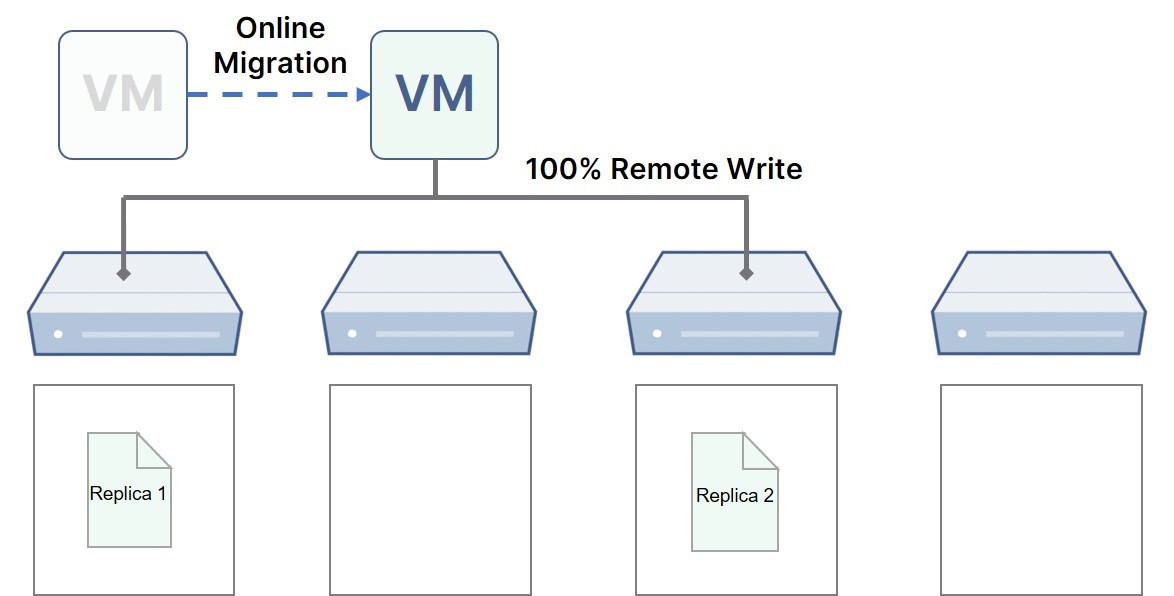

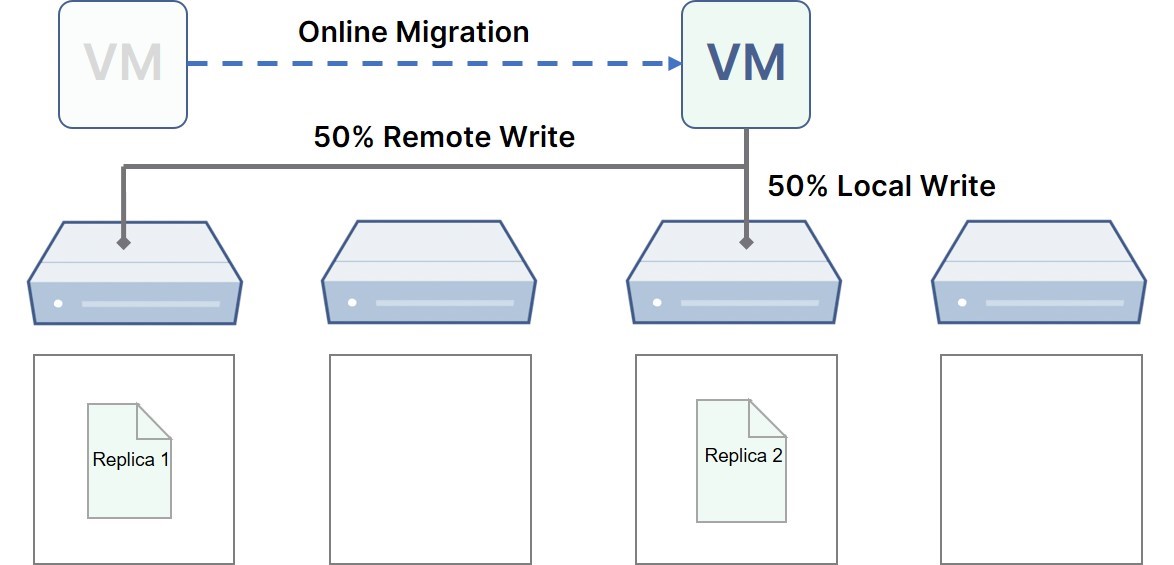

I/O Path Before and After VM Migration

ZBS data locality efficiently reduces I/O access latency because one replica is stored on the local host. But what happens if the VM is migrated? In general, there are two possible scenarios after VM migration.

Scenario 1: after migration, neither replica is local to the VM, which means 100% remote writes (66.6% probability).

Scenario 2: after migration, the VM lands on the node that holds the other replica, which means 50% remote writes (33.3% probability).

As shown above, it is more likely that all I/O is written remotely. To address this issue, ZBS optimizes the I/O path: during VM migration, newly written data is stored directly on the new local node, and the corresponding replica is moved to the new node after 6 hours. The migrated VM thus regains data locality, which also solves the problem of post-migration remote reads.
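The probabilities of the two migration scenarios can be reproduced with a short enumeration. It assumes a 4-node cluster, a VM that starts on node `A` with its remote replica on node `B`, and a destination chosen uniformly among the other three nodes; these labels and the helper are illustrative assumptions.

```python
from fractions import Fraction


def migration_outcomes(nodes, source, remote_replica):
    """Probability of each write pattern right after migration, before locality is rebuilt."""
    destinations = [n for n in nodes if n != source]
    # Only a destination that already holds the remote replica keeps one copy local.
    hits = sum(1 for d in destinations if d == remote_replica)
    p_half_remote = Fraction(hits, len(destinations))
    return {"100% remote write": 1 - p_half_remote, "50% remote write": p_half_remote}


result = migration_outcomes(["A", "B", "C", "D"], source="A", remote_replica="B")
for scenario, p in result.items():
    print(f"{scenario}: {p}")
```

Two of the three possible destinations hold no replica, giving 2/3 for Scenario 1 and 1/3 for Scenario 2.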

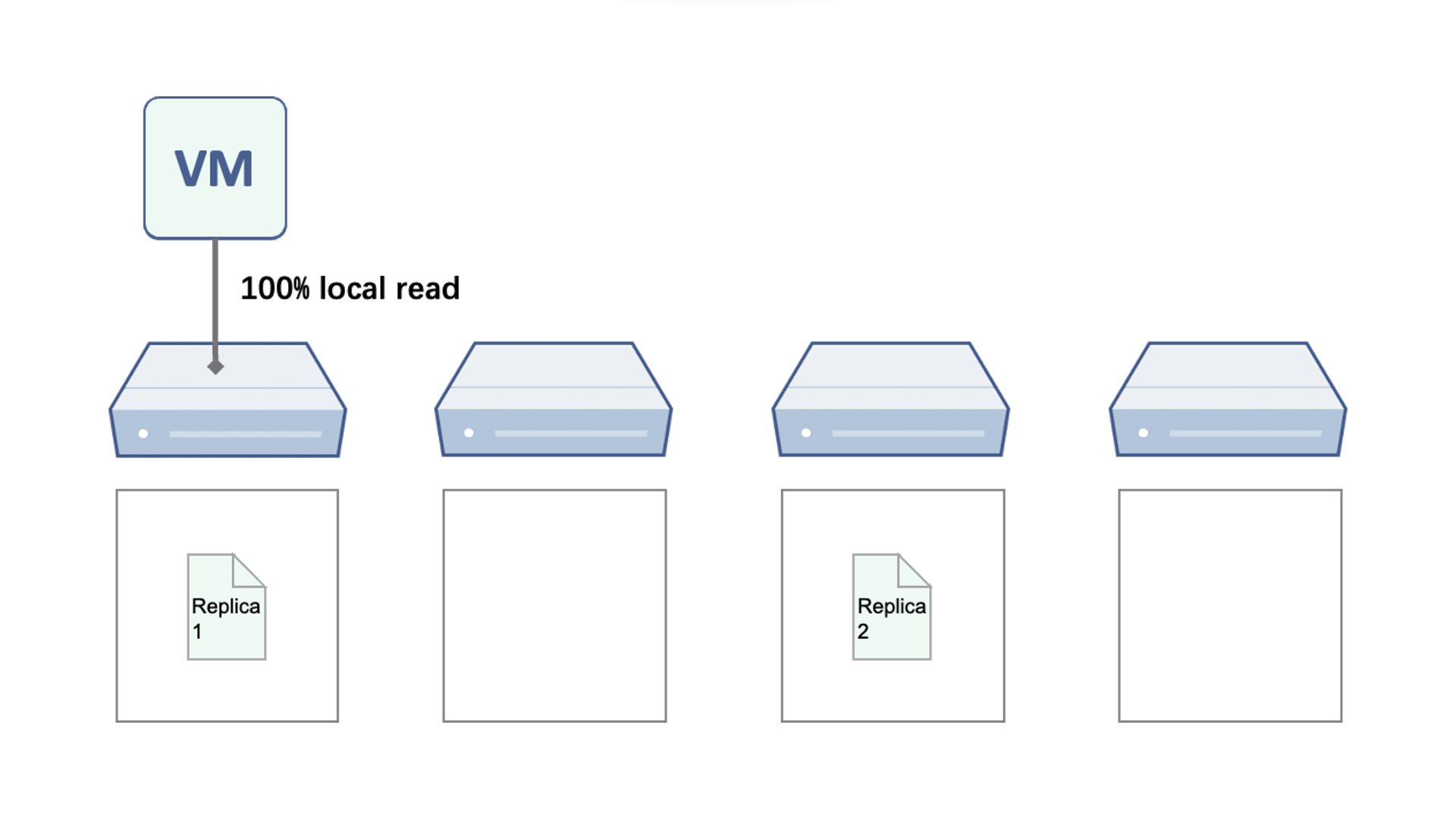

Read I/O Path

I/O Path under Normal State

Owing to data locality, reads in ZBS are always served from the local host.

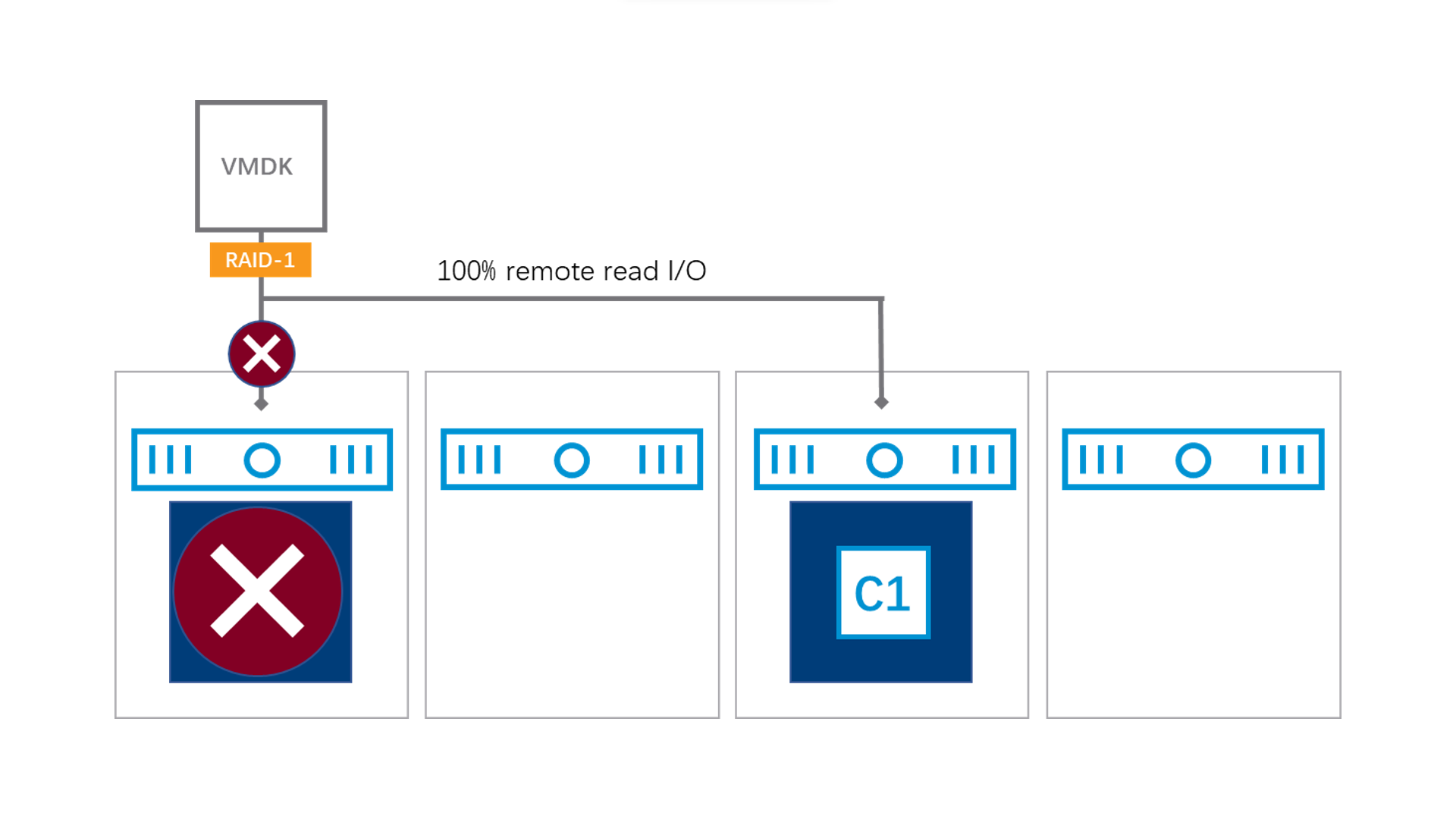

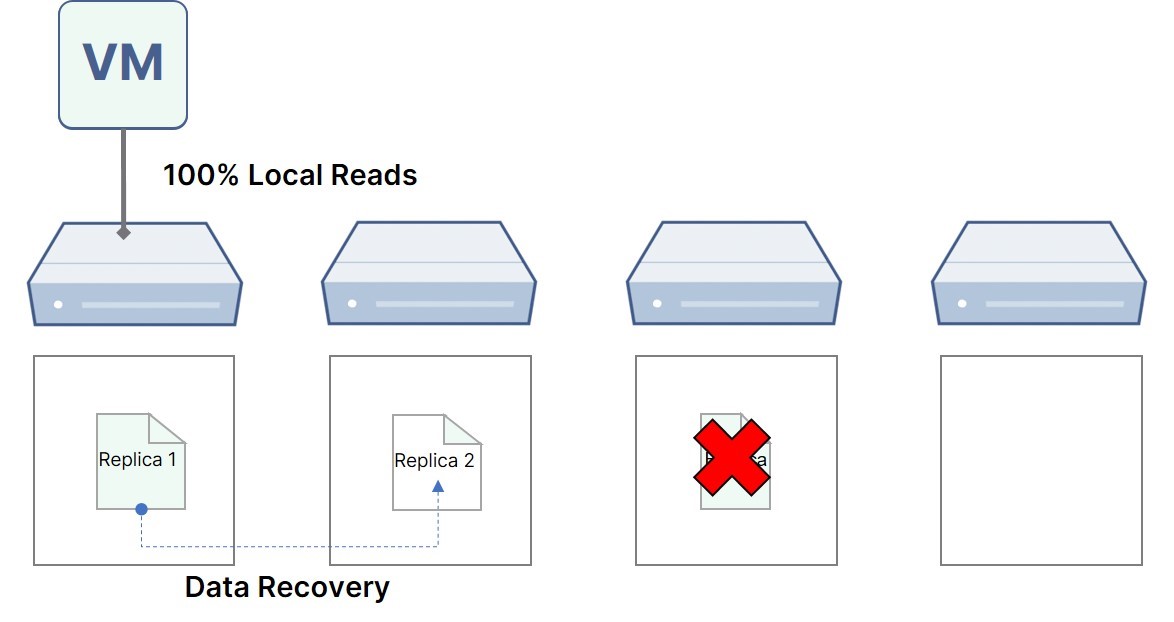

I/O Path under Data Recovery

Scenario 1: on the node where the VM is located, a hard disk failure occurs.

I/O access quickly switches from the local node to the remote node so that the storage service continues as usual. Data recovery is triggered at the same time, and a local replica is rebuilt so that local I/O access can resume.

Scenario 2: a hard disk failure occurs at the remote node.

Reads continue to be served locally while data is recovered by copying the available replica from the local node to a remote node.

However, during this process the single available replica has to handle application I/O and data recovery at the same time. Will this put too much pressure on the storage system? ZBS is optimized to balance the two I/O streams:

- ZBS has smaller data blocks (256MB vs 255GB) and more stripes than vSAN, so it can make better use of multiple hard disks to read and write data in distributed locations. This design not only improves data recovery speed but also avoids the performance bottleneck of an individual hard disk.

- Built-in automatic data recovery policy: SmartX’s independently developed storage engine automatically monitors application I/O and data recovery/migration I/O. It prioritizes application I/O performance and elastically adjusts the speed of data recovery/migration to limit its impact on application I/O.
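To give a sense of the granularity difference, the sketch below counts how many independently placeable units one replica of a virtual disk breaks into at each block size. It assumes a hypothetical 1TB disk and 1GB = 1024MB. The unit count is only a rough proxy for how widely recovery can be spread across disks; it does not model actual recovery speed.

```python
import math


def unit_count(vdisk_mb: int, unit_mb: int) -> int:
    """Number of fixed-size units needed to hold one replica of a virtual disk."""
    return math.ceil(vdisk_mb / unit_mb)


VDISK_MB = 1024 * 1024                              # hypothetical 1TB virtual disk
vsan_components = unit_count(VDISK_MB, 255 * 1024)  # 255GB components
zbs_extents = unit_count(VDISK_MB, 256)             # 256MB extents

print("vSAN components per replica:", vsan_components)
print("ZBS extents per replica:   ", zbs_extents)
```

With these assumptions, the same disk maps to 5 components at 255GB versus 4096 extents at 256MB.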

Conclusion

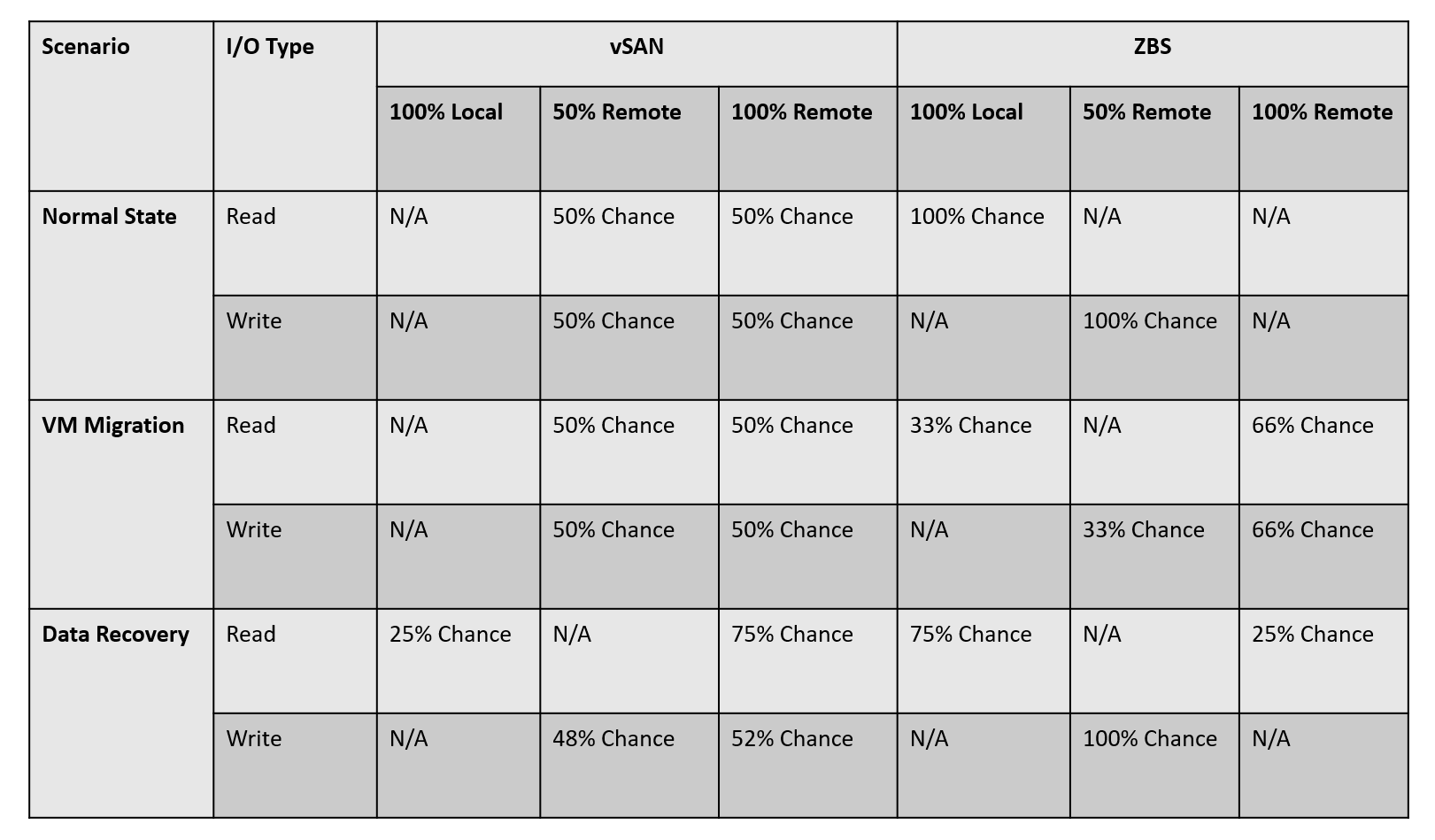

Based on the preceding discussion, we summarize the vSAN and ZBS I/O paths in various scenarios in the table. In terms of latency, 100% local access is the most preferable, followed by 50% remote access and then 100% remote access.

Under the normal state and in the data recovery scenario, ZBS has a higher probability of local I/O and a theoretically lower latency than vSAN. Although vSAN frequently performs remote I/O under the normal state, it provides better I/O paths for VM migration. ZBS’ low probability of local I/O after VM migration improves once the new data locality is established (which takes at least a few hours).

In short, ZBS shows a clear advantage if online VM migration is not required frequently (i.e., not every few hours). Otherwise, vSAN would be preferable, although frequent online VM migration is uncommon in production environments.

Other Impacts of I/O Path on Clusters

Regarding the I/O path’s impact on cluster performance, you may also wonder: if the total I/O load of the applications in an HCI cluster is quite low, will the I/O path design still affect cluster performance? Do we still need to pursue local reads and writes?

The answer is yes, because remote I/O not only increases latency but also consumes additional network resources. While this network overhead may not cause heavy stress under the normal state, the impact can be significant in scenarios such as:

- High-Density Deployment of VM

The higher the probability of remote I/O, the faster remote I/O traffic grows as VM density increases, which may eventually make it impossible to meet the SLA requirements of the VMs.

- Data Balance

When cluster capacity utilization is high, the system typically triggers data balancing (i.e., data migration). This usually involves data replication between hosts and puts heavy pressure on network bandwidth. As a result, data balancing and the VMs’ remote I/O tend to contend for network resources, which extends the time needed to complete data balancing and can even introduce unnecessary risks.

- Data Recovery

Data recovery is similar to data balance in that it relies on the network to replicate data. The difference is that data recovery requires more network bandwidth and has a greater impact on business. In the event of resource contention, prolonged data recovery may lead to greater risks.

Ultimately, users may end up bearing additional costs (e.g., upgrading to a 25G network) to avoid network resource contention, which may not be a desirable solution.
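To make the high-density scenario more concrete, here is a rough back-of-the-envelope sketch of aggregate remote I/O traffic under the normal state. The locality factors are derived from the 4-node analysis earlier in this article (for vSAN, an average of 1.5 remote copies per write and 75% remote reads; for ZBS, 1 remote copy per write and no remote reads). The workload numbers and the `remote_traffic_mbps` helper are purely hypothetical, so treat the output as an illustration rather than a sizing guide.

```python
def remote_traffic_mbps(vms, write_mbps, read_mbps, remote_copies_per_write, remote_read_fraction):
    """Aggregate network traffic (MB/s) caused by remote reads and writes of all VMs."""
    per_vm = write_mbps * remote_copies_per_write + read_mbps * remote_read_fraction
    return vms * per_vm


# Locality factors derived from the 4-node, 2-replica analysis (assumptions).
PROFILES = {
    "vSAN (FTT=1, random placement)": {"remote_copies_per_write": 1.5, "remote_read_fraction": 0.75},
    "ZBS (2 replicas, data locality)": {"remote_copies_per_write": 1.0, "remote_read_fraction": 0.0},
}

# Hypothetical workload: 100 VMs, each writing 10 MB/s and reading 20 MB/s.
traffic = {
    name: remote_traffic_mbps(vms=100, write_mbps=10, read_mbps=20, **factors)
    for name, factors in PROFILES.items()
}

for name, mbps in traffic.items():
    print(f"{name}: {mbps:.0f} MB/s of remote I/O traffic")
```

In this model the remote traffic scales linearly with the number of VMs, so any difference in locality is multiplied by VM density.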
